1 Prepare the K230 SDK and toolchain

Download the latest SDK from Gitee.

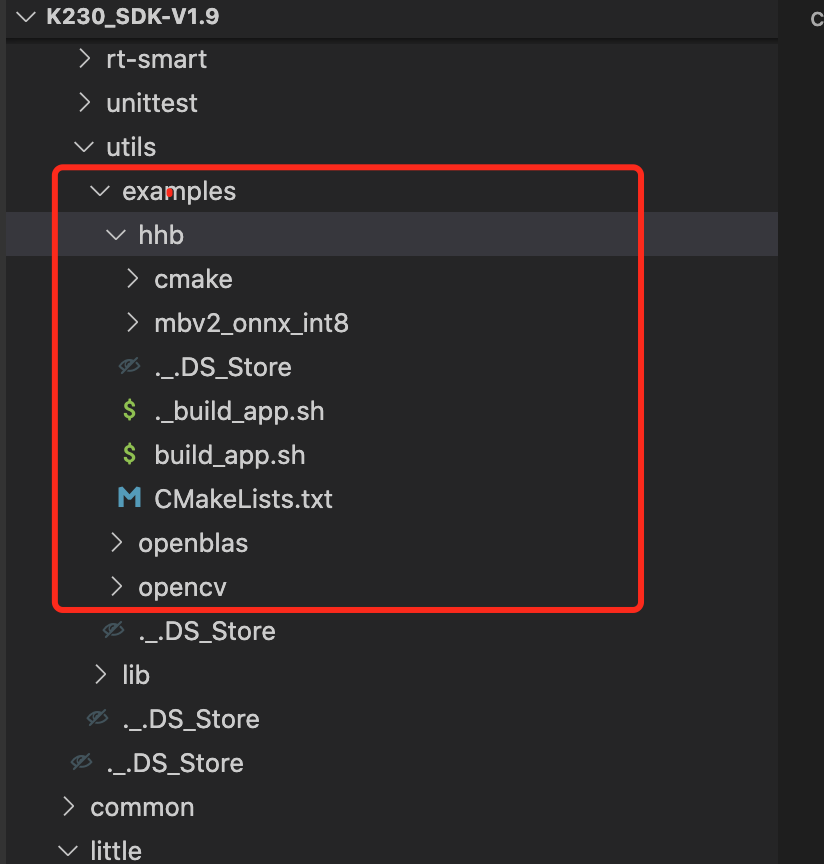

2 Implement the custom model by following the HHB demo. The demo to follow is in the big-core part of the SDK, as shown in the figure below.

Obtain the HHB Docker image following the official instructions, then load and start it:

```bash
tar xzf hhb-2.2.35.docker.tar.gz
cd hhb-2.2.35.docker/
docker load < hhb.2.2.35.img.tar
./start_hhb.sh
```

Compile the model following the official method.

Prepare a custom dataset

Dataset creation script

```python
import os


def create_dataset_structure(base_path, classes):
    """Create base_path/{train,val,test}/<class_name>/ directories."""
    os.makedirs(base_path, exist_ok=True)
    for split in ['train', 'val', 'test']:
        split_path = os.path.join(base_path, split)
        os.makedirs(split_path, exist_ok=True)
        for class_name in classes:
            # One sub-folder per class, the layout torchvision's ImageFolder expects
            class_path = os.path.join(split_path, class_name)
            os.makedirs(class_path, exist_ok=True)
    print(f"Dataset structure created under {base_path}")


if __name__ == "__main__":
    # Replace with your own class names; a classifier needs at least two classes
    classes = ["cat"]
    create_dataset_structure("custom_dataset", classes)
```
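The script above only creates empty folders. To fill them, one option is a random per-class split of an existing image collection. A minimal sketch, assuming a hypothetical source folder `raw_images/<class_name>/` and an illustrative 80/10/10 split (neither is part of the original workflow):

```python
import os
import random
import shutil


def split_images(src_root, dst_root, ratios=(0.8, 0.1, 0.1), seed=0):
    """Copy src_root/<class>/* into dst_root/{train,val,test}/<class>/.

    src_root and the default 80/10/10 ratios are hypothetical examples.
    Returns {split: number_of_files_copied}.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible split
    counts = {"train": 0, "val": 0, "test": 0}
    for class_name in sorted(os.listdir(src_root)):
        class_dir = os.path.join(src_root, class_name)
        if not os.path.isdir(class_dir):
            continue
        files = sorted(f for f in os.listdir(class_dir)
                       if f.lower().endswith((".png", ".jpg", ".jpeg")))
        rng.shuffle(files)
        n_train = int(len(files) * ratios[0])
        n_val = int(len(files) * ratios[1])
        splits = {
            "train": files[:n_train],
            "val": files[n_train:n_train + n_val],
            "test": files[n_train + n_val:],  # the remainder goes to test
        }
        for split, names in splits.items():
            out_dir = os.path.join(dst_root, split, class_name)
            os.makedirs(out_dir, exist_ok=True)
            for name in names:
                shutil.copy2(os.path.join(class_dir, name), out_dir)
            counts[split] += len(names)
    return counts
```

Usage: `split_images("raw_images", "custom_dataset")`. Keep in mind that very small classes can end up with an empty `val` or `test` folder, which `ImageFolder` will reject.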

Data augmentation and preprocessing script

```python
import os

import torchvision.datasets as datasets
from torch.utils.data import DataLoader
from torchvision import transforms


def get_data_transforms(input_size=224):
    # Training: random augmentation, then ImageNet normalization
    train_transforms = transforms.Compose([
        transforms.RandomResizedCrop(input_size),
        transforms.RandomHorizontalFlip(),
        transforms.RandomRotation(10),
        transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.1),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406],
                             std=[0.229, 0.224, 0.225])
    ])
    # Validation/test: deterministic resize + center crop, same normalization
    val_transforms = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(input_size),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406],
                             std=[0.229, 0.224, 0.225])
    ])
    return train_transforms, val_transforms


def create_data_loaders(data_dir, batch_size=32, input_size=224):
    train_transforms, val_transforms = get_data_transforms(input_size)

    # ImageFolder expects <split>/<class_name>/<images> and raises an error
    # if a class folder contains no images
    train_dataset = datasets.ImageFolder(
        os.path.join(data_dir, 'train'),
        transform=train_transforms
    )
    val_dataset = datasets.ImageFolder(
        os.path.join(data_dir, 'val'),
        transform=val_transforms
    )
    test_dataset = datasets.ImageFolder(
        os.path.join(data_dir, 'test'),
        transform=val_transforms
    )

    train_loader = DataLoader(
        train_dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=4,
        pin_memory=True
    )
    val_loader = DataLoader(
        val_dataset,
        batch_size=batch_size,
        shuffle=False,
        num_workers=4,
        pin_memory=True
    )
    test_loader = DataLoader(
        test_dataset,
        batch_size=batch_size,
        shuffle=False,
        num_workers=4,
        pin_memory=True
    )

    return train_loader, val_loader, test_loader, train_dataset.classes


if __name__ == "__main__":
    data_dir = "custom_dataset"
    train_loader, val_loader, test_loader, classes = create_data_loaders(data_dir)
    print(f"Classes: {classes}")
    print(f"Training set size: {len(train_loader.dataset)}")
    print(f"Validation set size: {len(val_loader.dataset)}")
    print(f"Test set size: {len(test_loader.dataset)}")
```
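`ImageFolder` assigns label indices by sorting the class folder names, and `train_dataset.classes` follows that same order, so output index i of the model is the i-th name in the sorted list (the order later written to `class_names.txt`). A stdlib-only sketch of that mapping, mirroring torchvision's folder scan (sub-directories only, sorted by name); the function name `folder_class_to_idx` is hypothetical and handy for checking labels on a machine without torch:

```python
import os


def folder_class_to_idx(split_dir):
    """Return (classes, class_to_idx) the way ImageFolder derives them:
    immediate sub-directories of split_dir, sorted by name, indexed from 0."""
    classes = sorted(entry.name for entry in os.scandir(split_dir) if entry.is_dir())
    return classes, {name: i for i, name in enumerate(classes)}
```

Usage: `folder_class_to_idx("custom_dataset/train")`. Plain files in the split folder are ignored, only folders count as classes.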

Model training script

```python
import copy
import time

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.models as models
from torch.optim import lr_scheduler
from torch.utils.tensorboard import SummaryWriter

# Assumption: the preprocessing script above is saved as data_utils.py
# (hypothetical file name) in the same directory
from data_utils import create_data_loaders


def train_model(model, dataloaders, criterion, optimizer, scheduler, num_epochs=25, device='cuda'):
    since = time.time()
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0
    writer = SummaryWriter()  # TensorBoard logs go to ./runs by default

    for epoch in range(num_epochs):
        print(f'Epoch {epoch}/{num_epochs - 1}')
        print('-' * 10)

        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()
            else:
                model.eval()

            running_loss = 0.0
            running_corrects = 0

            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)
                optimizer.zero_grad()

                # Track gradients only in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels).item()

            if phase == 'train':
                scheduler.step()  # StepLR: update the learning rate once per epoch

            epoch_loss = running_loss / len(dataloaders[phase].dataset)
            epoch_acc = running_corrects / len(dataloaders[phase].dataset)
            print(f'{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')
            writer.add_scalar(f'Loss/{phase}', epoch_loss, epoch)
            writer.add_scalar(f'Accuracy/{phase}', epoch_acc, epoch)

            # Keep the weights with the best validation accuracy
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        print()

    time_elapsed = time.time() - since
    print(f'Training finished in {time_elapsed // 60:.0f}m {time_elapsed % 60:.0f}s')
    print(f'Best validation accuracy: {best_acc:.4f}')
    model.load_state_dict(best_model_wts)
    writer.close()
    return model


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print(f"Using device: {device}")

    data_dir = "custom_dataset"
    train_loader, val_loader, _, classes = create_data_loaders(data_dir)
    dataloaders = {'train': train_loader, 'val': val_loader}

    # ImageNet-pretrained MobileNetV2 (weights API, torchvision >= 0.13)
    model = models.mobilenet_v2(weights=models.MobileNet_V2_Weights.IMAGENET1K_V1)
    # Replace the final linear layer with one output per custom class
    num_features = model.classifier[1].in_features
    model.classifier[1] = nn.Linear(num_features, len(classes))
    model = model.to(device)

    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)
    scheduler = lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

    # Train on the device selected above (CPU fallback when CUDA is unavailable)
    model = train_model(model, dataloaders, criterion, optimizer, scheduler,
                        num_epochs=25, device=device)

    torch.save(model.state_dict(), 'custom_model.pth')
    print("Model saved as custom_model.pth")

    # Class names in ImageFolder index order, one per line
    with open('class_names.txt', 'w') as f:
        for class_name in classes:
            f.write(f"{class_name}\n")
    print("Class names saved as class_names.txt")


if __name__ == "__main__":
    main()
```
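With `StepLR(step_size=7, gamma=0.1)` and `scheduler.step()` called once per epoch, the learning rate in effect during epoch e (0-based) is lr0 * gamma ** (e // step_size). The sketch below only evaluates that closed form for the settings in `main()` (it does not use PyTorch; `step_lr` is a hypothetical helper name):

```python
def step_lr(base_lr, epoch, step_size=7, gamma=0.1):
    """Learning rate in effect during `epoch` (0-based) under StepLR,
    assuming scheduler.step() is called once at the end of each epoch."""
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    schedule = [step_lr(0.001, e) for e in range(25)]
    # Print only the epochs at which the learning rate changes
    for e, lr in enumerate(schedule):
        if e == 0 or lr != schedule[e - 1]:
            print(f"epoch {e:2d}: lr = {lr:.0e}")
```

Over 25 epochs the rate therefore drops from 1e-3 to 1e-4 at epoch 7, 1e-5 at epoch 14 and 1e-6 at epoch 21, so the last few epochs change the weights very little.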

Convert to ONNX format

```python
import torch
import torch.nn as nn
import torchvision.models as models


def convert_to_onnx(model_path, class_names_path, onnx_path, input_size=224):
    # The number of classes determines the size of the final layer
    with open(class_names_path, 'r') as f:
        classes = [line.strip() for line in f if line.strip()]

    # Rebuild the training architecture without downloading pretrained weights
    model = models.mobilenet_v2(weights=None)
    num_features = model.classifier[1].in_features
    model.classifier[1] = nn.Linear(num_features, len(classes))
    # map_location lets a GPU-trained checkpoint load on a CPU-only machine
    model.load_state_dict(torch.load(model_path, map_location='cpu'))
    model.eval()

    dummy_input = torch.randn(1, 3, input_size, input_size)
    torch.onnx.export(
        model,
        dummy_input,
        onnx_path,
        export_params=True,
        opset_version=11,
        do_constant_folding=True,
        input_names=['input'],
        output_names=['output'],
        dynamic_axes={'input': {0: 'batch_size'}, 'output': {0: 'batch_size'}}
    )
    print(f"Model converted to ONNX format: {onnx_path}")


if __name__ == "__main__":
    convert_to_onnx(
        model_path="custom_model.pth",
        class_names_path="class_names.txt",
        onnx_path="custom_model.onnx",
        input_size=224
    )
```
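Before handing the ONNX file to HHB it is worth checking that it reproduces the PyTorch outputs on the same input, for example by running it with onnxruntime (`onnxruntime.InferenceSession(path).run(None, {"input": x})`) and comparing against `model(dummy_input)`. The helper below is only the comparison step, in plain Python so it runs anywhere; the name `compare_logits` and the 1e-4 tolerance are illustrative assumptions:

```python
def compare_logits(reference, candidate, atol=1e-4):
    """Compare two batches of logits given as lists of lists of floats.

    Returns (max_abs_diff, top1_agreement, within_tolerance)."""
    if len(reference) != len(candidate):
        raise ValueError("batch sizes differ")
    max_diff = 0.0
    agree = 0
    for ref_row, cand_row in zip(reference, candidate):
        if len(ref_row) != len(cand_row):
            raise ValueError("number of classes differs")
        max_diff = max(max_diff, max(abs(a - b) for a, b in zip(ref_row, cand_row)))
        # Top-1 prediction = index of the largest logit
        ref_top = max(range(len(ref_row)), key=ref_row.__getitem__)
        cand_top = max(range(len(cand_row)), key=cand_row.__getitem__)
        if ref_top == cand_top:
            agree += 1
    return max_diff, agree / len(reference), max_diff <= atol
```

Convert both outputs with `.tolist()` first. Exact equality is not expected; small float differences are normal, but the top-1 agreement should be 1.0.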

Calibration data script

```python
import os
import random

import torchvision.transforms as transforms
from PIL import Image


def prepare_calibration_data(dataset_path, output_path, num_images=100):
    os.makedirs(output_path, exist_ok=True)

    # Collect candidate images from the train and val splits
    all_images = []
    for split in ['train', 'val']:
        split_path = os.path.join(dataset_path, split)
        for class_name in os.listdir(split_path):
            class_path = os.path.join(split_path, class_name)
            if os.path.isdir(class_path):
                for img_name in os.listdir(class_path):
                    if img_name.lower().endswith(('.png', '.jpg', '.jpeg')):
                        all_images.append(os.path.join(class_path, img_name))

    selected_images = random.sample(all_images, min(num_images, len(all_images)))

    # Same preprocessing as the validation transforms used in training
    preprocess = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406],
                             std=[0.229, 0.224, 0.225])
    ])

    for i, img_path in enumerate(selected_images):
        img = Image.open(img_path).convert('RGB')
        img_tensor = preprocess(img)
        # Raw float32 values in CHW order (3 x 224 x 224), native byte order
        output_file = os.path.join(output_path, f"calib_{i:04d}.bin")
        with open(output_file, 'wb') as f:
            f.write(img_tensor.numpy().tobytes())

    print(f"Prepared {len(selected_images)} calibration images in {output_path}")


if __name__ == "__main__":
    prepare_calibration_data(
        dataset_path="custom_dataset",
        output_path="calibration_data",
        num_images=100
    )
```
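Each `.bin` file written above holds the normalized tensor as raw float32 values in CHW order, so for a 224 x 224 RGB input it should contain 3 * 224 * 224 = 150,528 values, i.e. 602,112 bytes. A stdlib-only sketch for reading one back to check its size and value range (`read_calib_bin` is a hypothetical helper; it assumes the file is read on the same kind of machine that wrote it, since both sides use native byte order). Also check the HHB user guide for your version to confirm which formats `--calibrate-dataset` accepts: image files are the commonly documented input, and HHB then applies `--data-mean`/`--data-scale` itself, so already-normalized tensors may not be what it expects.

```python
import os
from array import array


def read_calib_bin(path, shape=(3, 224, 224)):
    """Read a raw float32 CHW tensor written with numpy's tobytes().

    Uses native byte order; returns a flat array of floats."""
    expected = shape[0] * shape[1] * shape[2]
    size = os.path.getsize(path)
    if size != expected * 4:  # float32 = 4 bytes per value
        raise ValueError(f"{path}: {size} bytes, expected {expected * 4}")
    values = array("f")
    with open(path, "rb") as f:
        values.fromfile(f, expected)
    return values


if __name__ == "__main__":
    import glob
    for path in sorted(glob.glob("calibration_data/*.bin"))[:3]:
        v = read_calib_bin(path)
        print(path, len(v), f"min={min(v):.3f}", f"max={max(v):.3f}")
```

After ImageNet normalization the values typically fall roughly between -2.1 and 2.7; a range of 0 to 1 or 0 to 255 would mean the normalization step was skipped.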

Compile and quantize with HHB

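```bash
#!/bin/bash
# HHB normalizes inputs as (pixel - data-mean) * data-scale on 0-255 pixels
# (see the HHB user guide). To match the ImageNet Normalize() used in training:
#   --data-mean  = mean * 255 = 123.675 116.28 103.53 -> '124 116 104'
#   --data-scale = 1 / (std * 255); one value for all channels: 1 / 57.63 ~= 0.0174
docker run -it --rm \
    -v "$(pwd)":/home/alientek/k230_sdk-v1.9 \
    cannane/hhb:2.4.1 \
    /bin/bash -c "
cd /home/alientek/k230_sdk-v1.9
hhb --model-file custom_model.onnx \
    --data-scale 0.0174 \
    --data-mean '124 116 104' \
    --board k230 \
    --input-shape '1 3 224 224' \
    --quantization-scheme 'int8_sym' \
    --calibrate-dataset calibration_data \
    --output custom_model_quantized
echo 'HHB quantization finished'
"
```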
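The training and calibration scripts normalize each channel as (p / 255 - mean) / std, where p is the 0-255 pixel value. Rewriting that as (p - 255 * mean) * 1 / (255 * std) shows how it maps onto a subtract-mean-then-scale preprocessing step: the pixel-domain mean is the ImageNet mean times 255, and the scale is 1 / (255 * std). That HHB applies exactly (p - data-mean) * data-scale is an assumption to check against the HHB user guide for your version. Since a single `--data-scale` value is passed, the per-channel std has to be approximated; this sketch does the arithmetic and reports the per-channel error of that approximation (all function names are hypothetical):

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def hhb_preprocess_params(mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Convert torchvision Normalize() stats (defined on 0-1 inputs) into
    0-255 pixel-domain values: per-channel mean, per-channel scale, and a
    single scale computed from the average std."""
    mean_255 = [m * 255 for m in mean]
    scale_per_channel = [1 / (s * 255) for s in std]
    single_scale = 1 / (sum(std) / len(std) * 255)
    return mean_255, scale_per_channel, single_scale


def torch_normalize(p, m, s):
    return (p / 255 - m) / s            # what the training pipeline computes


def hhb_normalize(p, mean_255, scale):
    return (p - mean_255) * scale       # assumed HHB preprocessing formula


if __name__ == "__main__":
    mean_255, per_ch, single = hhb_preprocess_params()
    print("data-mean :", " ".join(f"{m:.3f}" for m in mean_255))
    print(f"data-scale: {single:.5f} (per channel: {[round(x, 5) for x in per_ch]})")
    # Relative error from using one scale instead of three
    for c in range(3):
        print(f"channel {c}: relative error {abs(single / per_ch[c] - 1):.2%}")
```

The single scale comes out at about 0.01735, within roughly 1.3% of each channel's exact value. A scale of 1/255 would skip the division by std entirely, leaving the inputs about 57 times smaller than during training.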

Run the demo following the official documentation.

Then modify the official demo to fit your own model.
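When adapting a classification demo to the custom model, the parts that usually change are the input size, the number of classes and the label list. This Python sketch only illustrates that post-processing logic; port it to whatever language the demo you start from uses. It assumes the output is one row of raw logits and that `class_names.txt` lists one name per line in training index order (all helper names are hypothetical):

```python
import math


def load_class_names(text):
    """Parse the contents of class_names.txt: one class per line, index order."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def softmax(logits):
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, class_names, k=5):
    """Return up to k (class_name, probability) pairs, highest first."""
    if len(logits) != len(class_names):
        raise ValueError("output size does not match the number of class names")
    probs = softmax(logits)
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(class_names[i], probs[i]) for i in order[:k]]
```

Usage: `top_k([0.2, 2.5, -1.0], load_class_names("bird\ncat\ndog\n"), k=2)` ranks `cat` first and `bird` second. A length mismatch between the model output and the label file is the most common symptom of a demo still configured for the original model.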
