Thanks to the elecfans forum and Sophgo for the opportunity to try out the Milk-V Duo development board.

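Last time we covered porting the OpenCV image processing library. This time we try porting a RoboMaster robot armor plate detection program to the board to test its image processing capability.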

Overview of the armor plate detection algorithm

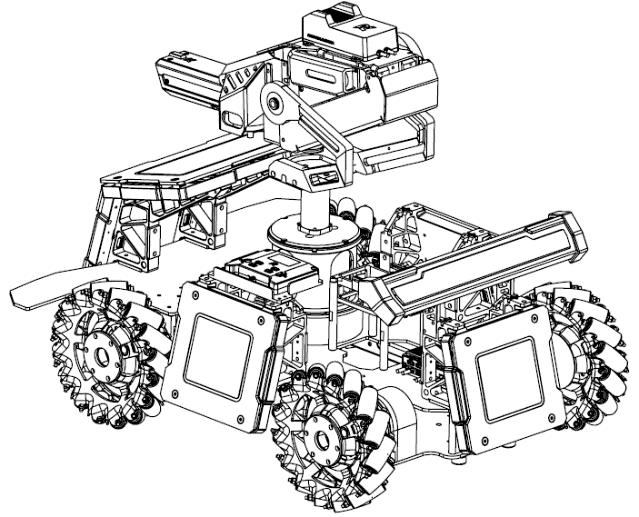

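The figure above shows an axonometric view of a RoboMaster infantry robot with armor plates mounted. The armor plates are fixed vertically around the four sides of the vehicle. On each plate the two light bars are parallel, the length and width of the light bars are fixed, and the spacing between the two bars is fixed.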

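The armor plate detection algorithm is already very mature. The basic idea is to first detect the light bars in the image using threshold segmentation, dilation and similar operations, then filter the light bars by aspect ratio, area and solidity (convexity). The remaining light bars are matched to find suitable pairs, each pair is treated as a candidate armor plate, and the pattern between the two bars is extracted and checked for a digit to filter the candidates. For a detailed introduction, see: https://blog.csdn.net/u010750137/article/details/96428059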
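The pairing step described above can be sketched without OpenCV as a plain geometric check. This is only an illustration under stated assumptions: the `LightBar` and `PairLimits` structs and all threshold values are hypothetical and are not the values from `ArmorParam.h`, which this post does not show.

```cpp
#include <algorithm>
#include <cmath>

// Simplified light-bar description (hypothetical type, for illustration only).
struct LightBar {
    float cx, cy;   // center of the bar, in pixels
    float length;   // length of the bar, in pixels
    float angle;    // tilt of the bar, in degrees
};

// Hypothetical thresholds; real values would come from tuning on actual footage.
struct PairLimits {
    float maxAngleDiff = 7.0f;        // bars on one plate are nearly parallel
    float maxLengthDiffRatio = 0.2f;  // bars on one plate have similar length
    float minAspect = 1.0f;           // center distance / mean bar length
    float maxAspect = 5.0f;
};

// Returns true when two light bars could belong to the same armor plate.
bool isArmorCandidate(const LightBar& a, const LightBar& b,
                      const PairLimits& lim = PairLimits{}) {
    const float longer = std::max(a.length, b.length);
    if (longer <= 0.0f) return false;  // degenerate bars cannot form a plate

    const float angleDiff = std::fabs(a.angle - b.angle);
    const float lenDiffRatio = std::fabs(a.length - b.length) / longer;
    if (angleDiff > lim.maxAngleDiff || lenDiffRatio > lim.maxLengthDiffRatio)
        return false;

    // Compare the distance between centers with the mean bar length.
    const float meanLen = (a.length + b.length) / 2.0f;
    const float dist = std::hypot(a.cx - b.cx, a.cy - b.cy);
    const float aspect = dist / meanLen;
    return aspect >= lim.minAspect && aspect <= lim.maxAspect;
}
```

With these illustrative limits, two nearly parallel bars of similar length whose centers are about 2.4 bar lengths apart pass the check, while a pair whose angles differ by 18 degrees is rejected.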

Porting considerations

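The Milk-V Duo's CPU runs at 1 GHz, but the board has only 64 MB of RAM, far less than a typical Linux system, so memory usage must be watched very carefully when porting. Our program processes only one frame at a time and keeps calls to clone() on images to a minimum during processing. Using even slightly more memory can cause the program to fail.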

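For video handling, we did not use OpenCV's VideoCapture and VideoWriter classes. Both are memory-hungry, and with our relatively high video resolution (1280×1024) the program fails after only a few frames. Instead, we store the video as an image sequence, which keeps the memory needed at any one time to a minimum.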
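To see why each extra copy of a frame matters on this board, the arithmetic can be sketched as follows. The helper names are hypothetical, and the estimate only counts raw pixel data for 8-bit images; it ignores OpenCV's own overhead and the memory already taken by the kernel and other processes, so the real headroom is smaller.

```cpp
#include <cstddef>

// Bytes of raw pixel data for one uncompressed 8-bit image.
constexpr std::size_t frameBytes(std::size_t width, std::size_t height,
                                 std::size_t channels) {
    return width * height * channels;
}

// Upper bound on how many such frames fit into a memory budget.
constexpr std::size_t framesThatFit(std::size_t budgetBytes,
                                    std::size_t perFrameBytes) {
    return perFrameBytes == 0 ? 0 : budgetBytes / perFrameBytes;
}

// 1280x1024 BGR frame: 3,932,160 bytes (3.75 MiB).
constexpr std::size_t kBgrFrame = frameBytes(1280, 1024, 3);
// 1280x1024 single-channel frame: 1,310,720 bytes (1.25 MiB).
constexpr std::size_t kGrayFrame = frameBytes(1280, 1024, 1);
// Total RAM of the board, treated here as 64 MiB.
constexpr std::size_t kTotalRam = 64u * 1024u * 1024u;
```

Even if the whole 64 MB were available, it would hold at most 17 color frames of this size, so a few clones plus the intermediate single-channel images already use a noticeable share of the RAM.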

The core code is as follows:

#include<iostream>

#include<opencv2/opencv.hpp>

#include<opencv2/imgproc/types_c.h>

#include<vector>

#include "ArmorParam.h"

#include "ArmorDescriptor.h"

#include "LightDescriptor.h"

#include <sys/time.h>

using namespace std;

using namespace cv;

template<typename T>

float distance(const cv::Point_<T>& pt1, const cv::Point_<T>& pt2)

{

return std::sqrt(std::pow((pt1.x - pt2.x), 2) + std::pow((pt1.y - pt2.y), 2));

}

class ArmorDetector

{

public:

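void init(int selfColor){

// Record our own color and derive the enemy color, so both members are set for either input.

_self_color = selfColor;

_enemy_color = (selfColor == RED) ? BLUE : RED;

}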

void loadImg(Mat& img ){

_srcImg = img;

Rect imgBound = Rect(cv::Point(50, 50), Point(_srcImg.cols - 50, _srcImg.rows- 50) );

_roi = imgBound;

_roiImg = _srcImg(_roi).clone();

}

int detect(){

_grayImg = separateColors();

int brightness_threshold = 120;

Mat binBrightImg;

threshold(_grayImg, binBrightImg, brightness_threshold, 255, cv::THRESH_BINARY);

Mat element = cv::getStructuringElement(cv::MORPH_ELLIPSE, cv::Size(3, 3));

dilate(binBrightImg, binBrightImg, element);

vector<vector<Point> > lightContours;

// binBrightImg is not used after this call, so it is passed directly to save one image copy.

findContours(binBrightImg, lightContours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_SIMPLE);

_debugImg = _roiImg.clone();

for(size_t i = 0; i < lightContours.size(); i++){

drawContours(_debugImg,lightContours, i, Scalar(0,0,255), 3, 8);

}

vector<LightDescriptor> lightInfos;

filterContours(lightContours, lightInfos);

if(lightInfos.empty()){

return -1;

}

drawLightInfo(lightInfos);

_armors = matchArmor(lightInfos);

if(_armors.empty()){

return -1;

}

for(size_t i = 0; i < _armors.size(); i++){

vector<Point2i> points;

for(int j = 0; j < 4; j++){

points.push_back(Point(static_cast<int>(_armors[i].vertex[j].x), static_cast<int>(_armors[i].vertex[j].y)));

}

polylines(_debugImg, points, true, Scalar(0, 255, 0), 3, 8, 0);

}

return 0;

}

Mat separateColors(){

vector<Mat> channels;

split(_roiImg,channels);

Mat grayImg;

if(_enemy_color==RED){

grayImg=channels.at(2)-channels.at(0);

}

else{

grayImg=channels.at(0)-channels.at(2);

}

return grayImg;

}

void filterContours(vector<vector<Point> >& lightContours, vector<LightDescriptor>& lightInfos){

for(const auto& contour : lightContours){

float lightContourArea = contourArea(contour);

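// fitEllipse needs at least 5 points, so skip such contours along with those that are too small.

if(contour.size() < 5 || lightContourArea < _param.light_min_area) continue;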

RotatedRect lightRec = fitEllipse(contour);

adjustRec(lightRec);

if(lightRec.size.width / lightRec.size.height >_param.light_max_ratio ||

lightContourArea / lightRec.size.area() <_param.light_contour_min_solidity)

continue;

lightRec.size.width *= _param.light_color_detect_extend_ratio;

lightRec.size.height *= _param.light_color_detect_extend_ratio;

lightInfos.push_back(LightDescriptor(lightRec));

}

}

void drawLightInfo(vector<LightDescriptor>& LD){

vector<std::vector<cv::Point> > cons;

int i = 0;

for(auto &lightinfo: LD){

RotatedRect rotate = lightinfo.rec();

// A stack array avoids a heap allocation that was never freed for each light bar.

cv::Point2f vertices[4];

rotate.points(vertices);

vector<Point> con;

for(int i = 0; i < 4; i++){

con.push_back(vertices[i]);

}

cons.push_back(con);

drawContours(_debugImg, cons, i, Scalar(0,255,255), 3, 8);

i++;

}

}

vector<ArmorDescriptor> matchArmor(vector<LightDescriptor>& lightInfos){

vector<ArmorDescriptor> armors;

sort(lightInfos.begin(), lightInfos.end(), [](const LightDescriptor& ld1, const LightDescriptor& ld2){

return ld1.center.x < ld2.center.x;

});

for(size_t i = 0; i < lightInfos.size(); i++){

for(size_t j = i + 1; (j < lightInfos.size()); j++){

const LightDescriptor& leftLight = lightInfos[i];

const LightDescriptor& rightLight = lightInfos[j];

float angleDiff_ = abs(leftLight.angle - rightLight.angle);

float LenDiff_ratio = abs(leftLight.length - rightLight.length) / max(leftLight.length, rightLight.length);

if(angleDiff_ > _param.light_max_angle_diff_ ||

LenDiff_ratio > _param.light_max_height_diff_ratio_){

continue;

}

float dis = distance(leftLight.center, rightLight.center);

float meanLen = (leftLight.length + rightLight.length) / 2;

float yDiff = abs(leftLight.center.y - rightLight.center.y);

float yDiff_ratio = yDiff / meanLen;

float xDiff = abs(leftLight.center.x - rightLight.center.x);

float xDiff_ratio = xDiff / meanLen;

float ratio = dis / meanLen;

int cnt = 0;

cnt++;

if(yDiff_ratio > _param.light_max_y_diff_ratio_ ||

xDiff_ratio < _param.light_min_x_diff_ratio_ ||

ratio > _param.armor_max_aspect_ratio_ ||

ratio < _param.armor_min_aspect_ratio_){

continue;

}

int armorType = ratio > _param.armor_big_armor_ratio ? BIG_ARMOR : SMALL_ARMOR;

float ratiOff = (armorType == BIG_ARMOR) ? max(_param.armor_big_armor_ratio - ratio, float(0)) : max(_param.armor_small_armor_ratio - ratio, float(0));

float yOff = yDiff / meanLen;

float rotationScore = -(ratiOff * ratiOff + yOff * yOff);

ArmorDescriptor armor(leftLight, rightLight, armorType, _grayImg, rotationScore, _param);

armors.emplace_back(armor);

break;

}

}

return armors;

}

void adjustRec(cv::RotatedRect& rec)

{

using std::swap;

float& width = rec.size.width;

float& height = rec.size.height;

float& angle = rec.angle;

while(angle >= 90.0) angle -= 180.0;

while(angle < -90.0) angle += 180.0;

if(angle >= 45.0)

{

swap(width, height);

angle -= 90.0;

}

else if(angle < -45.0)

{

swap(width, height);

angle += 90.0;

}

}

cv::Mat _debugImg;

private:

int _enemy_color;

int _self_color;

cv::Rect _roi;

cv::Mat _srcImg;

cv::Mat _roiImg;

cv::Mat _grayImg;

vector<ArmorDescriptor> _armors;

ArmorParam _param;

};

int main(int argc, char *argv[])

{

std::string img_folder = "/media/user/png/";

std::string out_folder = "/media/user/output/";

long frameToStart = 1;

cout << "Reading from frame " << frameToStart << endl;

int frameTostop = 100;

if (frameTostop < frameToStart)

{

cout << "Error: the end frame is smaller than the start frame" << endl;

return -1;

}

int count = 0;

Mat img;

int i = frameToStart;

struct timeval start, end;

double elapsed_time;

while(i < frameTostop)

{

Mat frame;

count++;

std::string img_path = img_folder + "output_" + std::to_string(i) + ".png";

img = imread(img_path );

if (img.empty())

{

std::cerr << "Cannot read image file " << img_path << std::endl;

return -1;

}

gettimeofday(&start, NULL);

ArmorDetector detector;

detector.init(RED);

detector.loadImg(img);

detector.detect();

gettimeofday(&end, NULL);

elapsed_time = (end.tv_sec - start.tv_sec) + (end.tv_usec - start.tv_usec) / 1000000.0;

img_path = out_folder + "result_" + std::to_string(i) + ".png";

imwrite(img_path, detector._debugImg);

i++;

cout << count << ":" << elapsed_time << endl;

}

}

Test results

A video of the test results is available on Bilibili: https://www.bilibili.com/video/BV1gz4y1t7QB/

![装甲板识别00-03-25[20230728-20165540].jpg](//file1.elecfans.com/web2/M00/8E/4B/wKgaomTDseOAHqtsAAE1LGFLwjw964.jpg)

The original video we used runs at 12 frames per second. During processing, we printed the processing time of each frame, in seconds:

[root@milkv]/media/user

Reading from frame 1

1:0.304027

2:0.313252

3:0.314704

4:0.407992

5:0.357159

6:0.324584

7:0.322873

8:0.333659

9:0.312911

10:0.313014

11:0.318744

12:0.574995

13:0.366383

14:0.311588

15:0.414218

16:0.354194

17:0.33607

18:0.314957

19:0.501529

20:0.364166

21:0.394598

22:0.44688

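Judging from the results, the armor plates in the video are detected just as well as on a PC, but processing is clearly slower. At roughly 0.3 to 0.6 seconds per frame, the board manages about 3 frames per second, well below the 12 frames per second of the source video, so it cannot meet the requirements of real-time detection. The Milk-V Duo's processing power is probably better suited to scenarios where camera images are captured, compressed and transmitted. For video detection algorithms like this one, it is still worth a try if real-time requirements are low.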
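The frame rate can be checked directly from the logged times. The sketch below copies the 22 values from the log above; the function names are hypothetical. Note that in the listing the timer brackets only the detector calls, so reading and writing the PNG files is not included in these numbers.

```cpp
#include <cstddef>
#include <numeric>
#include <vector>

// Per-frame processing times in seconds, copied from the log in this post.
inline std::vector<double> loggedFrameTimes() {
    return {0.304027, 0.313252, 0.314704, 0.407992, 0.357159, 0.324584,
            0.322873, 0.333659, 0.312911, 0.313014, 0.318744, 0.574995,
            0.366383, 0.311588, 0.414218, 0.354194, 0.33607,  0.314957,
            0.501529, 0.364166, 0.394598, 0.44688};
}

// Mean processing time per frame in seconds; 0 for an empty list.
inline double meanSeconds(const std::vector<double>& times) {
    if (times.empty()) return 0.0;
    const double sum = std::accumulate(times.begin(), times.end(), 0.0);
    return sum / static_cast<double>(times.size());
}

// Average throughput in frames per second; 0 when no time was measured.
inline double framesPerSecond(const std::vector<double>& times) {
    const double mean = meanSeconds(times);
    return mean > 0.0 ? 1.0 / mean : 0.0;
}
```

For these values the mean comes out at about 0.36 seconds per frame, or roughly 2.7 frames per second, which matches the estimate of about 3 frames per second.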
