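In the previous five installments we implemented each feature one by one. This is the final installment: we will integrate all of them into the complete project.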

Because all the models run at the same time, the memory demand is high, so we first need to adjust the system's memory allocation:

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/v0.1.0/memory_edit_V1.6.1.deb

sudo apt install ./memory_edit_V1.6.1.deb

memory_edit.sh -c -npu 7615 -vpu 1024 -vpp 4096 bm1684x_sm7m_v1.0.dtb

sudo cp /opt/sophon/memory_edit/emmcboot.itb /boot/emmcboot.itb && sync

sudo reboot
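As a quick sanity check on these numbers, the sketch below adds up the three regions and shows what is left for the operating system. It assumes the sizes passed to memory_edit.sh are in MiB and that the board has 16 GiB of RAM. Neither is stated above, so treat `TOTAL_MIB` as a hypothetical value and adjust it for your hardware.

```python
# Assumption: memory_edit.sh sizes are in MiB.
# Assumption: TOTAL_MIB describes a hypothetical 16 GiB board.
TOTAL_MIB = 16 * 1024

# Region sizes copied from the memory_edit.sh command above.
regions = {"npu": 7615, "vpu": 1024, "vpp": 4096}


def remaining_for_system(total_mib, regions):
    """Return the memory (MiB) left for Linux after the device regions are reserved."""
    reserved = sum(regions.values())
    if reserved > total_mib:
        raise ValueError(f"regions need {reserved} MiB but only {total_mib} MiB exist")
    return total_mib - reserved


if __name__ == "__main__":
    left = remaining_for_system(TOTAL_MIB, regions)
    print(f"reserved: {sum(regions.values())} MiB, left for the system: {left} MiB")
```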

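When testing each module on its own, we used a separate virtual environment for each. Putting everything into one environment inevitably produces some conflicts. After testing, I got all the modules running in a single environment. Since there are many packages, we install them from a requirements file. First create requirements.txt with the following content: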

aiofiles==23.2.1

aiohttp==3.9.5

aiosignal==1.3.1

altair==5.3.0

annotated-types==0.7.0

anyio==4.4.0

async-timeout==4.0.3

attrs==23.2.0

audioread==3.0.1

bypy==1.8.5

certifi==2024.7.4

cffi==1.16.0

chardet==3.0.4

charset-normalizer==3.3.2

click==8.1.7

coloredlogs==15.0.1

contourpy==1.1.1

cycler==0.12.1

Cython==3.0.10

dbus-python==1.2.16

decorator==5.1.1

dfss==1.7.8

dill==0.3.8

Distance==0.1.3

distro==1.4.0

distro-info===0.23ubuntu1

dnspython==2.6.1

dtw-python==1.5.1

email-validator==2.2.0

et-xmlfile==1.1.0

exceptiongroup==1.2.2

fastapi==0.111.1

fastapi-cli==0.0.4

ffmpy==0.3.2

filelock==3.15.4

Flask==2.2.2

flatbuffers==24.3.25

fonttools==4.53.1

frozenlist==1.4.1

fsspec==2024.6.1

g2p-en==2.1.0

gradio==3.39.0

gradio-client==1.1.0

h11==0.14.0

httpcore==1.0.5

httpx==0.27.0

huggingface-hub==0.23.4

humanfriendly==10.0

idna==3.7

importlib-metadata==8.0.0

importlib-resources==6.4.0

inflect==7.3.1

itsdangerous==2.1.2

jieba==0.42.1

jinja2==3.1.4

joblib==1.4.2

jsonschema==4.23.0

jsonschema-specifications==2023.12.1

kiwisolver==1.4.5

latex2mathml==3.77.0

lazy-loader==0.4

librosa==0.10.2.post1

linkify-it-py==2.0.3

llvmlite==0.41.1

lmdb==1.5.1

Markdown==3.6

markdown-it-py==3.0.0

MarkupSafe==2.1.5

matplotlib==3.7.5

mdit-py-plugins==0.3.3

mdtex2html==1.2.0

mdurl==0.1.2

more-itertools==10.3.0

mpmath==1.3.0

msgpack==1.0.8

multidict==6.0.5

multiprocess==0.70.16

netifaces==0.10.4

networkx==3.1

nltk==3.8.1

numba==0.58.1

numpy==1.24.4

onnx==1.14.0

onnxruntime==1.16.3

openai-whisper==20231117

opencv-python-headless==4.10.0.84

openpyxl==3.1.5

orjson==3.10.6

packaging==24.1

panda==0.3.1

pandas==2.0.3

pillow==10.4.0

pkgutil-resolve-name==1.3.10

platformdirs==4.2.2

pooch==1.8.2

protobuf==3.20.3

psutil==5.9.1

pybind11-global==2.13.1

pycparser==2.22

pydantic==2.8.2

pydantic-core==2.20.1

pydub==0.25.1

pygments==2.18.0

PyGObject==3.36.0

pymacaroons==0.13.0

PyNaCl==1.3.0

pyparsing==3.1.2

pypinyin==0.51.0

pyserial==3.5

python-apt==2.0.0

python-dateutil==2.9.0.post0

python-multipart==0.0.9

pytz==2024.1

PyYAML==6.0.1

referencing==0.35.1

regex==2024.5.15

requests==2.32.3

requests-toolbelt==1.0.0

requests-unixsocket==0.2.0

rich==13.7.1

rpds-py==0.19.0

ruff==0.5.2

safetensors==0.4.3

scikit-learn==1.3.2

scipy==1.10.1

semantic-version==2.10.0

sentencepiece==0.1.99

shellingham==1.5.4

six==1.16.0

sniffio==1.3.1

sophon-arm==3.7.0

soundfile==0.12.1

soxr==0.3.7

ssh-import-id==5.10

starlette==0.37.2

sympy==1.13.0

threadpoolctl==3.5.0

tiktoken==0.3.3

tokenizers==0.14.1

tomlkit==0.12.0

toolz==0.12.1

torch==2.3.1

torchaudio==2.3.1

tpu-perf==1.2.31

tqdm==4.66.4

transformers==4.34.1

typeguard==4.3.0

typer==0.12.3

typing-extensions==4.12.2

tzdata==2024.1

ubuntu-advantage-tools==20.3

uc-micro-py==1.0.3

unattended-upgrades==0.1

urllib3==2.2.2

uvicorn==0.30.1

websockets==11.0.3

Werkzeug==2.2.2

whisper-timestamped==1.15.4

yacs==0.1.8

yarl==1.9.4

zipp==3.19.2

Next, activate the project environment and install all the packages:

cd /data/project/jarvis

source jarvis_venv/bin/activate

pip install -r requirements.txt
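With this many pins, it can help to confirm afterwards that the environment really matches the file. The sketch below is one possible check, not part of the original project: it reads requirements.txt and compares each pin with what `importlib.metadata` reports for the active environment. The helper names `parse_pins` and `check` are made up for illustration.

```python
from importlib import metadata


def parse_pins(lines):
    """Yield (name, version) for each 'name==version' line; skip blanks and comments."""
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, _, version = line.partition("==")
        # lstrip handles the '===' form used by some Debian-style pins.
        yield name.strip(), version.lstrip("=").strip()


def check(pins):
    """Return (name, wanted, found) for packages that are missing or differ."""
    problems = []
    for name, wanted in pins:
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            found = None
        if found != wanted:
            problems.append((name, wanted, found))
    return problems


if __name__ == "__main__":
    with open("requirements.txt", encoding="utf-8") as fh:
        for name, wanted, found in check(parse_pins(fh)):
            print(f"{name}: wanted {wanted}, found {found or 'not installed'}")
```

Run it inside the activated virtual environment. No output means every pinned package is installed at the requested version.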

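Next, copy the files each module needs from its repository into the project folder. The model files are huge and disk space is limited, so I moved everything with mv instead of copying: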

mv /data/project/LLM-TPU/models/Llama3/bmodels ./

mv /data/project/LLM-TPU/models/Llama3/token_config ./

mv /data/project/LLM-TPU/support/lib_soc ./

mv /data/project/LLM-TPU/models/Llama3/python_demo/chat.cpython-38-aarch64-linux-gnu.so ./

mv /data/project/whisper-TPU_py/bmodel ./

mv /data/project/whisper-TPU_py/bmwhisper ./

mv /data/project/EmotiVoice-TPU/config ./

mv /data/project/EmotiVoice-TPU/data ./

mv /data/project/EmotiVoice-TPU/frontend ./

mv /data/project/EmotiVoice-TPU/model_file ./

mv /data/project/EmotiVoice-TPU/models ./

mv /data/project/EmotiVoice-TPU/processed ./

mv /data/project/EmotiVoice-TPU/tone_color_conversion ./

mv /data/project/Radxa-Model-Zoo/sample/YOLOv8_det/models/BM1684X ./

mv /data/project/Radxa-Model-Zoo/sample/YOLOv8_det/python/postprocess_numpy.py ./

mv /data/project/Radxa-Model-Zoo/sample/YOLOv8_det/python/utils.py ./

mkdir results

mkdir temp

That completes moving all the supporting files.
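Since a single missing folder only shows up minutes later, when the models load, a quick existence check can save time. The following is a minimal sketch, assuming it is run from the project folder. The entry names are taken from the mv and mkdir commands above, and the helper `missing_entries` is hypothetical.

```python
from pathlib import Path

# Names taken from the mv/mkdir commands above.
REQUIRED = [
    "bmodels", "token_config", "lib_soc",
    "chat.cpython-38-aarch64-linux-gnu.so",
    "bmodel", "bmwhisper",
    "config", "data", "frontend", "model_file", "models",
    "processed", "tone_color_conversion",
    "BM1684X", "postprocess_numpy.py", "utils.py",
    "results", "temp",
]


def missing_entries(project_dir, required=REQUIRED):
    """Return the required files or folders that do not exist under project_dir."""
    root = Path(project_dir)
    return [name for name in required if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_entries(".")
    print("all files in place" if not missing else "missing: " + ", ".join(missing))
```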

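Now we write the main program, main.py. It first imports and initializes each module, then calls them in turn inside the main function: starting from the path of an input audio file, it runs speech recognition on the file with whisper, then grabs a frame from the webcam with requests and runs object detection on it with yolov8. When both recognitions are done, it combines them into a question and feeds it to Llama3. Once Llama3 replies, TTS converts the reply into speech, the audio file is saved, and its path is returned from the function.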

Then we put this main function into the FastAPI server built in the previous installment, and we are done. The complete code is as follows:

from pipeline import Llama3

from bmwhisper.transcribe import cli, run_whisper

from yolov8_opencv import main as yolo

from yolov8_opencv import argsparser

from gr import tts_only

import requests

import argparse

from fastapi import FastAPI, UploadFile, File

from starlette.responses import FileResponse

# Initialize the LLM

parser = argparse.ArgumentParser()

parser.add_argument('-m', '--model_path', type=str, default='./bmodels/llama3-8b_int4_1dev_512/llama3-8b_int4_1dev_512.bmodel', help='path to the bmodel file')

parser.add_argument('-t', '--tokenizer_path', type=str, default="./token_config", help='path to the tokenizer file')

parser.add_argument('-d', '--devid', type=str, default='0', help='device ID to use')

parser.add_argument('--temperature', type=float, default=1.0, help='temperature scaling factor for the likelihood distribution')

parser.add_argument('--top_p', type=float, default=1.0, help='cumulative probability of token words to consider as a set of candidates')

parser.add_argument('--repeat_penalty', type=float, default=1.0, help='penalty for repeated tokens')

parser.add_argument('--repeat_last_n', type=int, default=32, help='repeat penalty for recent n tokens')

parser.add_argument('--max_new_tokens', type=int, default=1024, help='max new token length to generate')

parser.add_argument('--generation_mode', type=str, choices=["greedy", "penalty_sample"], default="greedy", help='mode for generating next token')

parser.add_argument('--prompt_mode', type=str, choices=["prompted", "unprompted"], default="prompted", help='use prompt format or original input')

parser.add_argument('--decode_mode', type=str, default="basic", choices=["basic", "jacobi"], help='mode for decoding')

parser.add_argument('--enable_history', action='store_true', default=True, help="if set, enables storing of history memory.")

llama_args = parser.parse_args()

model = Llama3(llama_args)

# Initialize whisper

w_model, writer, writer_args, args, temperature, loop_profile = cli(None)

# Initialize yolo

yolo_args = argsparser()

obj_dict = {0:'人',

1:'自行车',

2:'汽车',

3:'摩托车',

4:'飞机',

5:'巴士',

6:'火车',

7:'卡车',

8:'船',

9:'交通灯',

10:'消防栓',

11:'停止标志',

12:'停车收费表',

13:'长凳',

14:'鸟',

15:'猫',

16:'狗',

17:'马',

18:'羊',

19:'牛',

20:'大象',

21:'熊',

22:'斑马',

23:'长颈鹿',

24:'背包',

25:'雨伞',

26:'手提包',

27:'领带',

28:'手提箱',

29:'飞盘',

30:'双板滑雪板',

31:'单板滑雪板',

32:'运动球',

33:'风筝',

34:'棒球棒',

35:'棒球手套',

36:'滑板',

37:'冲浪板',

38:'网球拍',

39:'瓶子',

40:'酒杯',

41:'杯子',

42:'叉子',

43:'刀',

44:'勺子',

45:'碗',

46:'香蕉',

47:'苹果',

48:'三明治',

49:'橙子',

50:'西兰花',

51:'胡萝卜',

52:'热狗',

53:'披萨',

54:'甜甜圈',

55:'蛋糕',

56:'椅子',

57:'沙发',

58:'盆栽',

59:'床',

60:'餐桌',

61:'厕所',

62:'电视',

63:'笔记本电脑',

64:'鼠标',

65:'遥控器',

66:'键盘',

67:'手机',

68:'微波炉',

69:'烤箱',

70:'烤面包机',

71:'水槽',

72:'冰箱',

73:'书',

74:'时钟',

75:'花瓶',

76:'剪刀',

77:'泰迪熊',

78:'吹风机',

79:'牙刷'}

def main(audio_path):

    # Speech recognition

    question = run_whisper(audio_path, w_model, writer, writer_args, args, temperature, loop_profile)

    # Object detection on a webcam snapshot

    raw_pic = requests.get("http://192.168.xxx.xxx:8080/photoaf.jpg").content

    with open("./temp/pic.jpg", "wb") as pic:

        pic.write(raw_pic)

    yolo_list = yolo(yolo_args)

    # Map the detected class ids to their names

    det_list = []

    for i in yolo_list:

        det_list.append(obj_dict[i])

    if len(det_list):

        see = ", ".join(det_list)

    else:

        see = "什么都没有"

    print(see)

    # Build the question

    q = "假设你看见了:" + see + "。我的问题是:" + question

    # LLM

    model.input_str = q

    tokens = model.encode_tokens()

    model.stream_answer(tokens)

    # TTS

    res = tts_only(model.answer_cur,"6097","普通")

    print(res)

    return res

app = FastAPI()

@app.post("/uploadfile/")

async def create_upload_file(file: UploadFile = File(...)):

    # Save the uploaded audio, run the pipeline, and return the synthesized reply

    with open("received.wav", "wb") as out_file:

        content = await file.read()

        out_file.write(content)

    send = main("received.wav")

    return FileResponse(send)

if __name__ == "__main__":

    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)

    # Debug console: only reached after the server has stopped

    while 1:

        cmd = input(">")

        try:

            exec(cmd)

        except Exception as e:

            print(e)
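The step that turns detections into a prompt can be tried in isolation, without loading any model. The sketch below is a hedged variation on the code above, not the author's implementation: it counts repeated detections so that two people are reported once with a count, and it falls back to the raw class id for anything not in the table. `LABELS` is a three-entry excerpt of obj_dict, and `describe` and `build_question` are hypothetical helper names.

```python
from collections import Counter

# Three-entry excerpt of obj_dict, for illustration only.
LABELS = {0: "人", 41: "杯子", 63: "笔记本电脑"}


def describe(class_ids, labels=LABELS):
    """Turn a list of detected class ids into a short comma-separated description."""
    # Unknown ids fall back to their number instead of raising KeyError.
    counts = Counter(labels.get(i, str(i)) for i in class_ids)
    if not counts:
        return "什么都没有"
    return ", ".join(name if n == 1 else f"{n}个{name}" for name, n in counts.items())


def build_question(class_ids, question):
    """Combine what was seen with the transcribed question, as main() does."""
    return "假设你看见了:" + describe(class_ids) + "。我的问题是:" + question


if __name__ == "__main__":
    print(build_question([0, 0, 41], "桌子上有什么?"))
```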

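Of course, the imported modules also need some corresponding modifications. These were all covered in earlier installments, so I won't repeat them here. I will put the modified project files in the attachment for you to download and test.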

The webcam is an Android phone running a small app that turns the phone into a camera. Just fill in the phone's IP address in the main program.
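A wrong IP or a sleeping phone would otherwise make the snapshot request hang, so it is worth testing the camera URL on its own first. Here is a minimal sketch using only the standard library (main.py itself uses requests). The port and path are the ones from main.py, while the helper names and the sample address `192.168.1.20` are hypothetical.

```python
import urllib.request


def snapshot_url(ip, port=8080, path="/photoaf.jpg"):
    """Build the snapshot URL used in main.py for a given phone IP."""
    return f"http://{ip}:{port}{path}"


def fetch_snapshot(ip, dest="./temp/pic.jpg", timeout=5.0):
    """Download one frame to dest; network failures raise urllib.error.URLError or OSError."""
    with urllib.request.urlopen(snapshot_url(ip), timeout=timeout) as resp:
        data = resp.read()
    if not data:
        raise RuntimeError("webcam returned an empty image")
    with open(dest, "wb") as fh:
        fh.write(data)
    return dest


if __name__ == "__main__":
    # Hypothetical address: replace it with your phone's IP.
    print(fetch_snapshot("192.168.1.20"))
```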

Finally, before running, add the C libraries to the environment variables:

export LD_LIBRARY_PATH=/opt/sophon/libsophon-0.5.0/lib:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH=./lib_soc:$LD_LIBRARY_PATH

export PYTHONPATH=$PWD:$PYTHONPATH

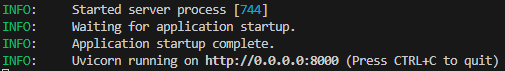

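Then run main.py. At startup it loads and initializes each model, which takes a few minutes, so be patient. When the output shows that the FastAPI server has started, we can run the host-side code and start playing with it.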
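For a quick test of the /uploadfile/ endpoint without the host-side program, a small standard-library client is enough. This is a sketch under assumptions, not the host-side code from the earlier installment: the form field name `file` matches the parameter of create_upload_file, while `build_multipart`, `ask`, the file names, and the server address are hypothetical.

```python
import urllib.request
import uuid


def build_multipart(field, filename, data, content_type="audio/wav"):
    """Encode one file as multipart/form-data; return (body, Content-Type header value)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def ask(server, wav_path, reply_path="reply.wav", timeout=300):
    """Upload a recorded question and save the spoken answer returned by the server."""
    with open(wav_path, "rb") as fh:
        body, ctype = build_multipart("file", "question.wav", fh.read())
    req = urllib.request.Request(
        f"{server}/uploadfile/", data=body,
        headers={"Content-Type": ctype}, method="POST",
    )
    # The whole pipeline runs before the reply arrives, hence the long timeout.
    with urllib.request.urlopen(req, timeout=timeout) as resp, open(reply_path, "wb") as out:
        out.write(resp.read())
    return reply_path


if __name__ == "__main__":
    # Hypothetical board address: replace it with your own.
    print(ask("http://192.168.1.10:8000", "question.wav"))
```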

I wrote a run.sh file in the project folder; you can also use it to activate the virtual environment and configure the paths in one go.
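The contents of run.sh are not reproduced here. As a rough Python equivalent of the manual steps, the sketch below builds the same environment variables and starts main.py with the virtual environment's interpreter. It is an illustration rather than the author's script: `build_env` is a hypothetical helper, and it uses absolute paths where the export commands use `./lib_soc` and `$PWD`.

```python
import os
import subprocess
import sys


def build_env(project_dir, base=None):
    """Return a copy of the environment with the library and module paths prepended."""
    env = dict(os.environ if base is None else base)
    lib_paths = [
        os.path.join(project_dir, "lib_soc"),
        "/opt/sophon/libsophon-0.5.0/lib",
    ]
    old_ld = env.get("LD_LIBRARY_PATH")
    env["LD_LIBRARY_PATH"] = os.pathsep.join(lib_paths + ([old_ld] if old_ld else []))
    old_py = env.get("PYTHONPATH")
    env["PYTHONPATH"] = os.pathsep.join([project_dir] + ([old_py] if old_py else []))
    return env


if __name__ == "__main__":
    project = os.path.dirname(os.path.abspath(__file__))
    # Calling the venv's interpreter directly has the same effect as activating it.
    python = os.path.join(project, "jarvis_venv", "bin", "python")
    sys.exit(subprocess.call([python, "main.py"], cwd=project, env=build_env(project)))
```

Setting LD_LIBRARY_PATH for the child process, rather than inside main.py, matters because the dynamic loader reads it when the process starts.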

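The project source code can be downloaded below. I packaged everything except the model files, which means you still need to fetch the following 4 folders yourself: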

BM1684X

bmodel

bmodels

model_file
