
RK1808

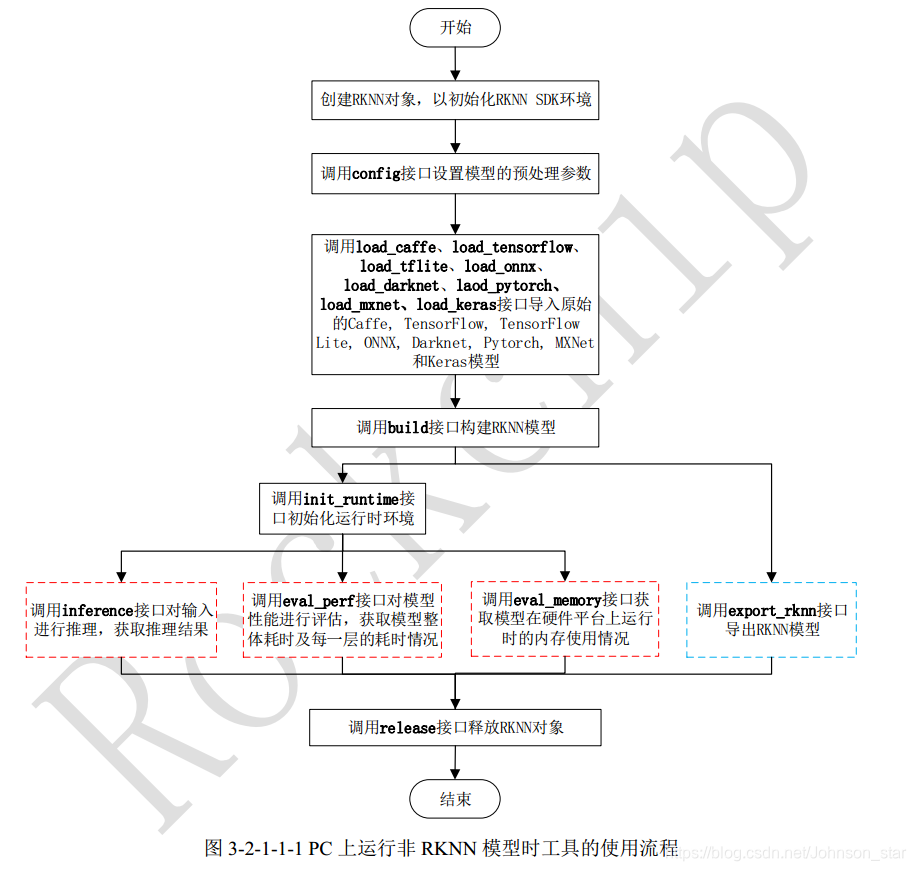

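The compute stick should be used in slave (passive) mode, that is, together with a host computer. At initialization the host loads the model onto the RK1808 stick. The host then reads the video data, sends it to the 1808 over USB, and the computed results are sent back.

To check the device_id of the plugged-in compute stick:

    python -m rknn.bin.list_devices
    ##output
    *************************
    all device(s) with ntb mode:
    TS018083201100828
    *************************

The workflow is shown in the figure.

This time the PyTorch model loading interface load_pytorch() is used:

    load_pytorch(model, input_size_list, convert_engine='torch') -> int

    # Load the resnet18 model from the current directory
    ret = rknn.load_pytorch(model='./resnet18.pt', input_size_list=[[3,224,224]])

model: path to the PyTorch model file (.pt suffix). The model must be in TorchScript format. Required parameter.

input_size_list: the shape of each input. For example, [[1,224,224],[3,224,224]] means two inputs, one with shape [1,224,224] and the other with shape [3,224,224]. Required parameter.

convert_engine: introduced in RKNN Toolkit 1.6.0, it specifies the conversion engine for PyTorch models. RKNN Toolkit currently supports two engines, torch1.2 and torch. The torch1.2 engine can only convert models from PyTorch 1.1 to 1.2, whereas the torch engine supports models up to PyTorch 1.6.0 and needs torch 1.5.0 or later. torch is the default engine. Optional parameter.

The PyTorch model therefore has to be converted to TorchScript before it can be imported. For the conversion we used:

    torch.jit.trace(func, example_inputs, optimize=None, check_trace=True, check_inputs=None, check_tolerance=1e-5)

trace traces a function and returns an executable object optimized by just-in-time (JIT) compilation, either a ScriptFunction or a ScriptModule. Tracing is suited to code that operates only on tensors, or on lists, dicts, and tuples of tensors.

With torch.jit.trace and torch.jit.trace_module, an existing model or Python function can be converted into a TorchScript ScriptFunction or ScriptModule. Tracing requires a dummy input: the whole function is run on it and every operation is recorded.

1. A plain function is recorded as a ScriptFunction.
2. The forward function of an nn.Module, or the nn.Module itself, is recorded as a ScriptModule. This module keeps all parameters of the original module.

Tracing has the following limitations:

1. Dependencies outside the traced execution (for example I/O or global variables) are not captured.
2. Tracing records only the operations executed for the given dummy input. It therefore does not work for networks that perform different operations for different inputs.
3. Tracing cannot record control flow (if statements, loops and the like). If the model contains control flow, so that the network structure changes with the input, the traced result may be wrong. The training/evaluation mode is also fixed at trace time: if the mode during tracing differs from the mode at actual run time, layers such as dropout will cause problems.

These issues can introduce subtle, hard-to-detect errors. In such cases scripting is a better fit than tracing.

    cpu_model = self.model.cpu()
    pt_model = torch.jit.trace(cpu_model, torch.rand(1,3,512,512).to(torch.device('cpu')))
    pt_model.save("./circleDet.pt")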
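The control-flow limitation of tracing can be illustrated without PyTorch. The following is a toy tracer in plain Python, not torch.jit itself: the names TracedValue, toy_trace and model are hypothetical and exist only for this sketch. It records the arithmetic performed on one example input and replays that recording, which is roughly why a traced model always follows the branch taken by the dummy input.

```python
class TracedValue:
    """Wraps a number and records every arithmetic operation applied to it."""

    def __init__(self, value, tape):
        self.value = value
        self.tape = tape

    def __gt__(self, other):
        # The comparison is evaluated eagerly and is NOT recorded,
        # so the branch decision is lost in the trace.
        return self.value > other

    def __mul__(self, k):
        self.tape.append(("mul", k))
        return TracedValue(self.value * k, self.tape)

    def __sub__(self, k):
        self.tape.append(("sub", k))
        return TracedValue(self.value - k, self.tape)


def model(x):
    # Data-dependent control flow: the branch depends on the input value.
    if x > 0:
        return x * 2
    return x - 1


def toy_trace(func, example_input):
    """Run func once on example_input and return a function replaying the tape."""
    tape = []
    func(TracedValue(example_input, tape))

    def replay(x):
        for op, k in tape:
            x = x * k if op == "mul" else x - k
        return x

    return replay


traced = toy_trace(model, 3)      # the example input takes the x > 0 branch
print(model(3), traced(3))        # both follow the same branch
print(model(-4), traced(-4))      # the traced version still multiplies by 2
```

For a negative input the original function subtracts 1, while the replayed trace still multiplies by 2. With real models, torch.jit.script is the usual alternative when this kind of branching matters.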

The first attempt to load the traced model failed:

    ret = rknn.load_pytorch(model='./circleDet.pt',input_size_list=[[1,3,512,512]])
    ##output
    --> Loading model
    ./circleDet.pt
    ********************
    W Channels(512) of input node: input.64 > 128, mean/std values will be set to default 0/1.
    W Please do pre-processing manually before inference.
    E Catch exception when loading pytorch model: ./circleDet.pt!
    E Traceback (most recent call last):
    E   File "rknn/api/rknn_base.py", line 339, in rknn.api.rknn_base.RKNNBase.load_pytorch
    E   File "rknn/base/RKNNlib/RK_nn.py", line 146, in rknn.base.RKNNlib.RK_nn.RKnn.load_pytorch
    E   File "rknn/base/RKNNlib/app/importer/import_pytorch.py", line 128, in rknn.base.RKNNlib.app.importer.import_pytorch.ImportPytorch.run
    E   File "rknn/base/RKNNlib/converter/convert_pytorch_new.py", line 2255, in rknn.base.RKNNlib.converter.convert_pytorch_new.convert_pytorch.load
    E   File "rknn/base/RKNNlib/converter/convert_pytorch_new.py", line 2370, in rknn.base.RKNNlib.converter.convert_pytorch_new.convert_pytorch.parse_nets
    E   File "rknn/base/RKNNlib/converter/convert_pytorch_new.py", line 2059, in rknn.base.RKNNlib.converter.convert_pytorch_new.PyTorchOpConverter.convert_operators
    E   File "rknn/base/RKNNlib/converter/convert_pytorch_new.py", line 741, in rknn.base.RKNNlib.converter.convert_pytorch_new.PyTorchOpConverter.convolution
    E   File "rknn/base/RKNNlib/converter/convert_pytorch_new.py", line 200, in rknn.base.RKNNlib.converter.convert_pytorch_new._set_layer_out_shape
    E   File "rknn/base/RKNNlib/layer/convolution.py", line 89, in rknn.base.RKNNlib.layer.convolution.Convolution.compute_out_shape
    E   File "rknn/base/RKNNlib/layer/filter_layer.py", line 122, in rknn.base.RKNNlib.layer.filter_layer.FilterLayer.filter_shape
    E IndexError: list index out of range
    Load circleDet failed!
The cause appears to be that the input_size_list of rknn.load_pytorch() only needs the image size (channels, height, width) and must not include the batch_size dimension. The call was therefore changed to:

    ret = rknn.load_pytorch(model='./circleDet.pt',input_size_list=[[3,512,512]])

Final test output:

    --> config model
    done
    --> Loading model
    ./circleDet.pt
    ********************
    done
    --> Building model
    W The target_platform is not set in config, using default target platform rk1808.
    done
    --> Export RKNN model
    done
    --> Init runtime environment
    *************************
    None devices connected.
    *************************
    done
    --> Begin evaluate model performance
    W When performing performance evaluation, inputs can be set to None to use fake inputs.
    ========================================================================
                                   Performance
    ========================================================================
    Layer ID  Name                                Time(us)
    3         convolution.relu.pooling.layer2_2   2315
    6         convolution.relu.pooling.layer2_2   1772
    9         convolution.relu.pooling.layer2_2   1078
    13        convolution.relu.pooling.layer2_2   3617
    15        leakyrelu.layer_3                   4380
    16        convolution.relu.pooling.layer2_2   4166
    18        leakyrelu.layer_3                   1136
    19        pooling.layer2                      1143
    20        fullyconnected.relu.layer_3         4
    21        leakyrelu.layer_3                   5
    22        fullyconnected.relu.layer_3         4
    23        activation.layer_3                  5
    24        openvx.tensor_multiply              5974
    25        convolution.relu.pooling.layer2_2   1257
    10        pooling.layer2_3                    629
    11        convolution.relu.pooling.layer2_2   319
    27        convolution.relu.pooling.layer2_2   993
    28        convolution.relu.pooling.layer2_2   1546
    30        leakyrelu.layer_3                   2125
    31        convolution.relu.pooling.layer2_2   7677
    33        leakyrelu.layer_3                   2815
    34        pooling.layer2                      2286
    35        fullyconnected.relu.layer_3         5
    36        leakyrelu.layer_3                   5
    37        fullyconnected.relu.layer_3         4
    38        activation.layer_3                  5
    39        openvx.tensor_multiply              11948
    40        convolution.relu.pooling.layer2_2   1888
    42        openvx.tensor_add                   468
    46        convolution.relu.pooling.layer2_2   944
    50        convolution.relu.pooling.layer2_2   2203
    52        leakyrelu.layer_3                   2815
    53        convolution.relu.pooling.layer2_2   5076
    55        leakyrelu.layer_3                   531
    56        pooling.layer2                      573
    57        fullyconnected.relu.layer_3         5
    58        leakyrelu.layer_3                   5
    59        fullyconnected.relu.layer_3         4
    60        activation.layer_3                  5
    61        openvx.tensor_multiply              2987
    62        convolution.relu.pooling.layer2_2   680
    47        pooling.layer2_3                    355
    48        convolution.relu.pooling.layer2_2   240
    64        convolution.relu.pooling.layer2_2   498
    65        convolution.relu.pooling.layer2_2   1374
    67        leakyrelu.layer_3                   1062
    68        convolution.relu.pooling.layer2_2   4884
    70        leakyrelu.layer_3                   1410
    71        pooling.layer2                      1146
    72        fullyconnected.relu.layer_3         17
    73        leakyrelu.layer_3                   5
    74        fullyconnected.relu.layer_3         5
    75        activation.layer_3                  5
    76        openvx.tensor_multiply              5974
    77        convolution.relu.pooling.layer2_2   1359
    79        openvx.tensor_add                   234
    83        convolution.relu.pooling.layer2_2   571
    87        convolution.relu.pooling.layer2_2   1373
    89        leakyrelu.layer_3                   1410
    90        convolution.relu.pooling.layer2_2   3299
    92        leakyrelu.layer_3                   267
    93        pooling.layer2                      289
    94        fullyconnected.relu.layer_3         17
    95        leakyrelu.layer_3                   5
    96        fullyconnected.relu.layer_3         5
    97        activation.layer_3                  5
    98        openvx.tensor_multiply              1493
    99        convolution.relu.pooling.layer2_2   682
    184       convolution.relu.pooling.layer2_2   164
    185       deconvolution.layer_3               14998
    197       convolution.relu.pooling.layer2_2   1851
    84        pooling.layer2_3                    180
    85        convolution.relu.pooling.layer2_2   126
    101       convolution.relu.pooling.layer2_2   251
    102       convolution.relu.pooling.layer2_2   1357
    104       leakyrelu.layer_3                   531
    105       convolution.relu.pooling.layer2_2   3518
    107       leakyrelu.layer_3                   531
    108       pooling.layer2                      579
    109       fullyconnected.relu.layer_3         30
    110       leakyrelu.layer_3                   5
    111       fullyconnected.relu.layer_3         9
    112       activation.layer_3                  5
    113       openvx.tensor_multiply              2987
    114       convolution.relu.pooling.layer2_2   1356
    116       openvx.tensor_add                   117
    120       convolution.relu.pooling.layer2_2   570
    124       convolution.relu.pooling.layer2_2   1357
    126       leakyrelu.layer_3                   531
    127       convolution.relu.pooling.layer2_2   1717
    129       leakyrelu.layer_3                   136
    130       pooling.layer2                      151
    131       fullyconnected.relu.layer_3         30
    132       leakyrelu.layer_3                   5
    133       fullyconnected.relu.layer_3         9
    134       activation.layer_3                  5
    135       openvx.tensor_multiply              746
    136       convolution.relu.pooling.layer2_2   680
    168       convolution.relu.pooling.layer2_2   120
    169       deconvolution.layer_3               11250
    177       convolution.relu.pooling.layer2_2   1472
    188       convolution.relu.pooling.layer2_2   164
    189       deconvolution.layer_3               14998
    201       convolution.relu.pooling.layer2_2   1851
    121       pooling.layer2_3                    92
    122       convolution.relu.pooling.layer2_2   118
    138       convolution.relu.pooling.layer2_2   127
    139       convolution.relu.pooling.layer2_2   1363
    141       leakyrelu.layer_3                   267
    142       convolution.relu.pooling.layer2_2   2132
    144       leakyrelu.layer_3                   267
    145       pooling.layer2                      302
    146       fullyconnected.relu.layer_3         57
    147       leakyrelu.layer_3                   5
    148       fullyconnected.relu.layer_3         24
    149       activation.layer_3                  5
    150       openvx.tensor_multiply              1493
    151       convolution.relu.pooling.layer2_2   1361
    153       openvx.tensor_add                   58
    157       convolution.relu.pooling.layer2_2   569
    160       convolution.relu.pooling.layer2_2   119
    161       deconvolution.layer_3               11250
    165       convolution.relu.pooling.layer2_2   1392
    172       convolution.relu.pooling.layer2_2   117
    173       deconvolution.layer_3               11250
    181       convolution.relu.pooling.layer2_2   1416
    192       convolution.relu.pooling.layer2_2   117
    193       deconvolution.layer_3               14998
    205       convolution.relu.pooling.layer2_2   1851
    207       convolution.relu.pooling.layer2_2   4786
    208       convolution.relu.pooling.layer2_2   964
    210       convolution.relu.pooling.layer2_2   4786
    211       convolution.relu.pooling.layer2_2   960
    213       convolution.relu.pooling.layer2_2   4786
    214       convolution.relu.pooling.layer2_2   964
    Total Time(us): 235764
    FPS(600MHz): 3.18
    FPS(800MHz): 4.24
    Note: Time of each layer is converted according to 800MHz!
    ========================================================================
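Returning to the input_size_list fix: the shape used for the trace input includes the batch dimension, while load_pytorch() in this thread only accepted per-input shapes without it. A minimal sketch of a helper that derives one from the other follows. The name to_input_size_list is hypothetical and not part of RKNN Toolkit, and the sketch assumes 4-D (batch, channels, height, width) image inputs, which is what this thread uses.

```python
def to_input_size_list(*trace_shapes):
    """Drop the leading batch dimension from each example-input shape.

    Assumes every shape is 4-D: (batch, channels, height, width).
    """
    sizes = []
    for shape in trace_shapes:
        shape = list(shape)
        if len(shape) != 4:
            raise ValueError(f"expected a 4-D NCHW shape, got {shape}")
        sizes.append(shape[1:])  # keep [channels, height, width]
    return sizes


# Shape of the dummy input passed to torch.jit.trace in this thread.
print(to_input_size_list((1, 3, 512, 512)))
```

The result can then be passed as input_size_list, so the trace shape and the RKNN shape are defined in one place and cannot drift apart.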

Source code:

    import numpy as np
    import cv2
    from rknn.api import RKNN

    # Per-channel normalization constants used by pre_process()
    mean = [0.31081248, 0.33751315, 0.35128374]
    std = [0.28921212, 0.29571667, 0.29091577]


    def pre_process(image, scale=1):
        """Warp the image to 512x512, normalize it and return it in CHW layout."""
        height, width = image.shape[0:2]
        new_height = int(height * scale)
        new_width = int(width * scale)
        inp_height, inp_width = 512, 512
        c = np.array([new_width / 2., new_height / 2.], dtype=np.float32)
        s = max(height, width) * 1.0
        trans_input = get_affine_transform(c, s, 0, [inp_width, inp_height])
        resized_image = cv2.resize(image, (new_width, new_height))
        inp_image = cv2.warpAffine(
            resized_image, trans_input, (inp_width, inp_height),
            flags=cv2.INTER_LINEAR)
        inp_image = ((inp_image / 255. - mean) / std).astype(np.float32)
        images = inp_image.transpose(2, 0, 1)  # HWC -> CHW
        return images


    def get_affine_transform(center, scale, rot, output_size,
                             shift=np.array([0, 0], dtype=np.float32), inv=0):
        """Build the 2x3 affine matrix mapping the source region to output_size."""
        if not isinstance(scale, np.ndarray) and not isinstance(scale, list):
            scale = np.array([scale, scale], dtype=np.float32)

        scale_tmp = scale
        src_w = scale_tmp[0]
        dst_w = output_size[0]
        dst_h = output_size[1]

        rot_rad = np.pi * rot / 180
        src_dir = get_dir([0, src_w * -0.5], rot_rad)
        dst_dir = np.array([0, dst_w * -0.5], np.float32)

        # Three point pairs define the affine transform
        src = np.zeros((3, 2), dtype=np.float32)
        dst = np.zeros((3, 2), dtype=np.float32)
        src[0, :] = center + scale_tmp * shift
        src[1, :] = center + src_dir + scale_tmp * shift
        dst[0, :] = [dst_w * 0.5, dst_h * 0.5]
        dst[1, :] = np.array([dst_w * 0.5, dst_h * 0.5], np.float32) + dst_dir

        src[2:, :] = get_3rd_point(src[0, :], src[1, :])
        dst[2:, :] = get_3rd_point(dst[0, :], dst[1, :])

        if inv:
            trans = cv2.getAffineTransform(np.float32(dst), np.float32(src))
        else:
            trans = cv2.getAffineTransform(np.float32(src), np.float32(dst))

        return trans


    def get_dir(src_point, rot_rad):
        """Rotate src_point by rot_rad radians."""
        sn, cs = np.sin(rot_rad), np.cos(rot_rad)

        src_result = [0, 0]
        src_result[0] = src_point[0] * cs - src_point[1] * sn
        src_result[1] = src_point[0] * sn + src_point[1] * cs

        return src_result


    def get_3rd_point(a, b):
        """Return a third point forming a right angle at b with the segment a-b."""
        direct = a - b
        return b + np.array([-direct[1], direct[0]], dtype=np.float32)


    if __name__ == '__main__':

        # Create RKNN object
        rknn = RKNN()

        # pre-process config
        print('--> config model')
        rknn.config(mean_values=[[0.31081248, 0.33751315, 0.35128374]],
                    std_values=[[0.28921212, 0.29571667, 0.29091577]],
                    reorder_channel='0 1 2')
        print('done')

        # Load PyTorch model (input_size_list without the batch dimension)
        print('--> Loading model')
        ret = rknn.load_pytorch(model='./circleDet.pt', input_size_list=[[3,512,512]])
        if ret != 0:
            print('Load circleDet failed!')
            exit(ret)
        print('done')

        # Build model
        print('--> Building model')
        ret = rknn.build(do_quantization=False)
        if ret != 0:
            print('Build circleDet failed!')
            exit(ret)
        print('done')

        # Export rknn model
        print('--> Export RKNN model')
        ret = rknn.export_rknn('./circleDet.rknn')
        if ret != 0:
            print('Export circleDet.rknn failed!')
            exit(ret)
        print('done')

        # Set inputs
        img = cv2.imread('./20.png')
        #img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        img = pre_process(img)

        # init runtime environment
        print('--> Init runtime environment')
        _, ntb_devices = rknn.list_devices()
        ret = rknn.init_runtime()
        if ret != 0:
            print('Init runtime environment failed')
            exit(ret)
        print('done')

        # Inference
        # print('--> Running model')
        # outputs = rknn.inference(inputs=[img])
        # print('done')

        # perf
        print('--> Begin evaluate model performance')
        perf_results = rknn.eval_perf(inputs=[img])
        print('done')

        rknn.release()

It looks like circleDet is still too complex for the actual task.
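That judgment rests on the throughput in the performance report, and the two FPS figures can be reproduced from the total time. The sketch below assumes that frame rate scales linearly with the clock frequency. This is inferred from the reported numbers (which it matches), not taken from RKNN documentation, and the function name fps_from_total_time is hypothetical.

```python
def fps_from_total_time(total_time_us, reported_mhz=800, target_mhz=800):
    """Frames per second implied by a per-frame time measured at reported_mhz.

    Assumes throughput scales linearly with the clock frequency.
    """
    fps_at_reported = 1_000_000 / total_time_us  # microseconds -> frames/second
    return fps_at_reported * target_mhz / reported_mhz


total_us = 235764  # "Total Time(us)" from the report, converted to 800MHz

print(round(fps_from_total_time(total_us), 2))                  # 4.24
print(round(fps_from_total_time(total_us, target_mhz=600), 2))  # 3.18
```

At roughly 236 ms per frame, the model reaches only about 3 to 4 frames per second, which is the basis for calling it too heavy for the task.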

|

|

|

|

你正在撰写答案

如果你是对答案或其他答案精选点评或询问,请使用“评论”功能。

353 浏览 1 评论

1758 浏览 1 评论

3013 浏览 1 评论

synopsys 的design ware:DW_fpv_div,浮点数除法器,默认32位下,想提升覆盖率(TMAX),如果用功能case去提升覆盖率呢?

3751 浏览 1 评论

RK3588 GStreamer调试四路鱼眼摄像头四宫格显示报错

6562 浏览 1 评论

/6

/6

小黑屋| 手机版| Archiver| 电子发烧友 ( 湘ICP备2023018690号 )

GMT+8, 2024-12-18 13:31 , Processed in 0.589279 second(s), Total 75, Slave 58 queries .

Powered by 电子发烧友网

© 2015 bbs.elecfans.com

关注我们的微信

下载发烧友APP

电子发烧友观察

版权所有 © 湖南华秋数字科技有限公司

电子发烧友 (电路图) 湘公网安备 43011202000918 号 电信与信息服务业务经营许可证:合字B2-20210191

淘帖

淘帖 977

977