1. Basics

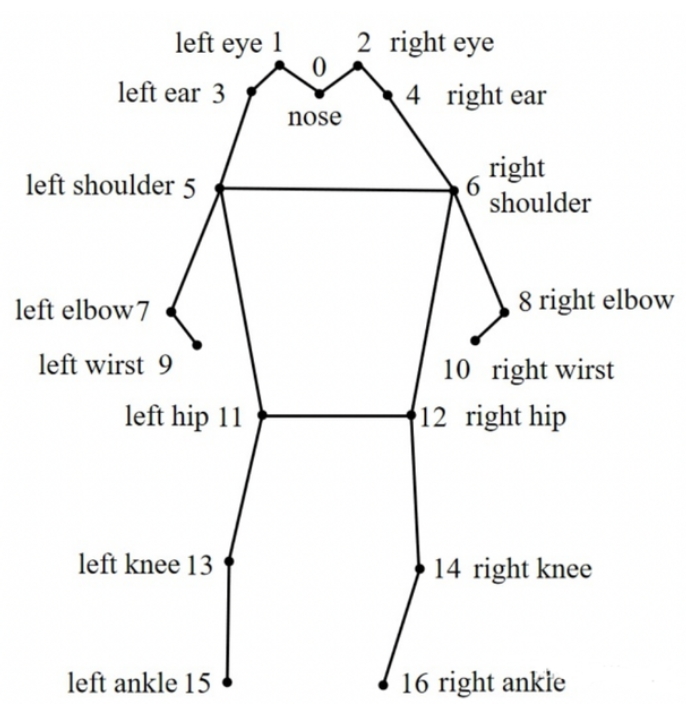

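For each detection box, the YOLOv8-pose keypoint model outputs 51 keypoint values: 17 keypoints, each described by its (x, y) coordinates and a confidence score (17 × 3 = 51).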

The 17 keypoints are: "nose", "left_eye", "right_eye", "left_ear", "right_ear", "left_shoulder", "right_shoulder", "left_elbow", "right_elbow", "left_wrist", "right_wrist", "left_hip", "right_hip", "left_knee", "right_knee", "left_ankle", "right_ankle".

By checking the angles formed by different keypoints, various postures can be detected, such as bending over or lying down.

2. Preparation

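I originally planned to set up my own environment, train a model, and then evaluate it, but I ran out of time, and I have not been able to get access to a training machine recently. So I simply pulled ax-samples and its submodules instead. Given how capable the AX650N is, I compiled directly on the board.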

First, run `git clone https://github.com/AXERA-TECH/ax-samples.git` to download the source code.

Next, set the target chip to AX650 and let CMake generate the Makefile:

```bash
cd ax-samples
mkdir build && cd build
cmake -DBSP_MSP_DIR=/soc/ -DAXERA_TARGET_CHIP=ax650 ..
```

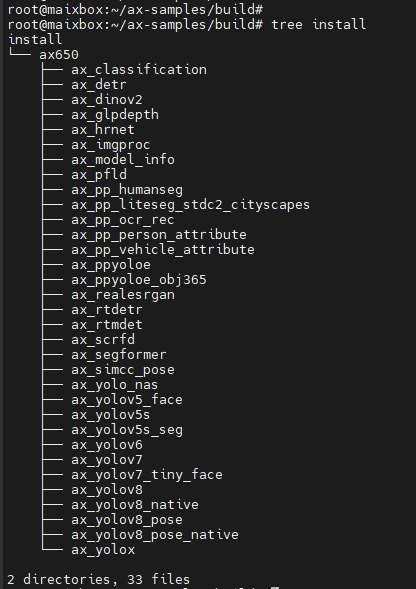

Then run `make -j6` and `make install`. The generated example executables are placed under `build/install/ax650/`.

Since the goal is sitting-posture detection, I chose `ax_yolov8_pose`.

The source is worth a look; its inference flow is clearly structured step by step:

```cpp
// 1. Initialize the NPU engine (AX620E uses the no-argument variant)
#ifdef AXERA_TARGET_CHIP_AX620E
auto ret = AX_ENGINE_Init();
#else
AX_ENGINE_NPU_ATTR_T npu_attr;
memset(&npu_attr, 0, sizeof(npu_attr));
npu_attr.eHardMode = AX_ENGINE_VIRTUAL_NPU_DISABLE;  // virtual NPU disabled
auto ret = AX_ENGINE_Init(&npu_attr);
#endif
if (0 != ret)
{
    return ret;
}

// 2. Load the .axmodel file into memory
std::vector<char> model_buffer;
if (!utilities::read_file(model, model_buffer))
{
    fprintf(stderr, "Read Run-Joint model(%s) file failed.\n", model.c_str());
    return false;
}

// 3. Create an engine handle from the model buffer
AX_ENGINE_HANDLE handle;
ret = AX_ENGINE_CreateHandle(&handle, model_buffer.data(), model_buffer.size());
SAMPLE_AX_ENGINE_DEAL_HANDLE
fprintf(stdout, "Engine creating handle is done.\n");

// 4. Create the execution context
ret = AX_ENGINE_CreateContext(handle);
SAMPLE_AX_ENGINE_DEAL_HANDLE
fprintf(stdout, "Engine creating context is done.\n");

// 5. Query the input/output tensor information
AX_ENGINE_IO_INFO_T* io_info;
ret = AX_ENGINE_GetIOInfo(handle, &io_info);
SAMPLE_AX_ENGINE_DEAL_HANDLE
fprintf(stdout, "Engine get io info is done. \n");

// 6. Allocate the input/output buffers
AX_ENGINE_IO_T io_data;
ret = middleware::prepare_io(io_info, &io_data, std::make_pair(AX_ENGINE_ABST_DEFAULT, AX_ENGINE_ABST_CACHED));
SAMPLE_AX_ENGINE_DEAL_HANDLE
fprintf(stdout, "Engine alloc io is done. \n");

// 7. Copy the input image data into the input buffer
ret = middleware::push_input(data, &io_data, io_info);
SAMPLE_AX_ENGINE_DEAL_HANDLE_IO
fprintf(stdout, "Engine push input is done. \n");
fprintf(stdout, "--------------------------------------\n");

// 8. Warm-up runs (not timed)
for (int i = 0; i < 5; ++i)
{
    AX_ENGINE_RunSync(handle, &io_data);
}

// 9. Timed inference runs; each run's latency goes into time_costs
std::vector<float> time_costs(repeat, 0);
for (int i = 0; i < repeat; ++i)
{
    timer tick;
    ret = AX_ENGINE_RunSync(handle, &io_data);
    time_costs[i] = tick.cost();
    SAMPLE_AX_ENGINE_DEAL_HANDLE_IO
}

// 10. Post-process the outputs (boxes and keypoints) and report timing
post_process(io_info, &io_data, mat, input_w, input_h, time_costs);
fprintf(stdout, "--------------------------------------\n");

// 11. Release the IO buffers and the engine handle
middleware::free_io(&io_data);
return AX_ENGINE_DestroyHandle(handle);
```

Finally, download the ready-made YOLOV8S-POSE model from the official ModelZoo and copy it to the development board. The URL is https://pan.baidu.com/s/1CCu-oKw8jUEg2s3PEhTa4g?pwd=xq9f.

3. Running

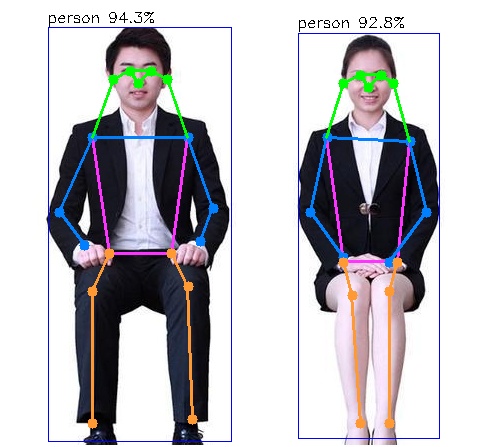

The test image contains typical sitting postures.

Run the sample:

```
root@maixbox:~/ax-samples/build/install/ax650# ./ax_yolov8_pose -m /root/yolov8s-pose.axmodel -i /root/SIT.png
--------------------------------------
model file : /root/yolov8s-pose.axmodel
image file : /root/SIT.png
img_h, img_w : 640 640
--------------------------------------
Engine creating handle is done.
Engine creating context is done.
Engine get io info is done.
Engine alloc io is done.
Engine push input is done.
--------------------------------------
post process cost time:0.25 ms
--------------------------------------
Repeat 1 times, avg time 12.81 ms, max_time 12.81 ms, min_time 12.81 ms
--------------------------------------
detection num: 2
0: 94%, [ 48, 27, 232, 442], person
0: 93%, [ 298, 33, 440, 439], person
--------------------------------------
```

In the resulting output, both people are detected and their poses are estimated well.
