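First, let's resolve the Docker build problem from part two.

A physical machine is still required; even under WSL2 the build rarely completes properly.

Start by building the GPU version of the container on native Ubuntu with a P40 GPU. This has to be done on bare metal. Add your user to the docker group so that the container can be started as a non-root user: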

sudo gpasswd -a $USER docker

sudo ./docker_build.sh -t gpu -f pytorch
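A new group membership only takes effect in a new login session, so it can be worth checking before going further. The following is a minimal sketch, assuming a hypothetical helper named in_group (it is not part of Docker or Vitis AI) that looks for a group name in the output of id -nG:

```shell
#!/usr/bin/env bash
# in_group is a hypothetical helper, not part of Docker or Vitis AI.
# Usage: in_group GROUP [GROUP_LIST]
# GROUP_LIST defaults to the current user's groups as printed by `id -nG`.
in_group() {
    local wanted="$1"
    local groups="${2:-$(id -nG)}"
    local g
    for g in $groups; do
        [ "$g" = "$wanted" ] && return 0
    done
    return 1
}

if in_group docker; then
    echo "docker group active: docker commands should work without sudo"
else
    echo "docker group not active yet: log out and back in, or run 'newgrp docker'"
fi
```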

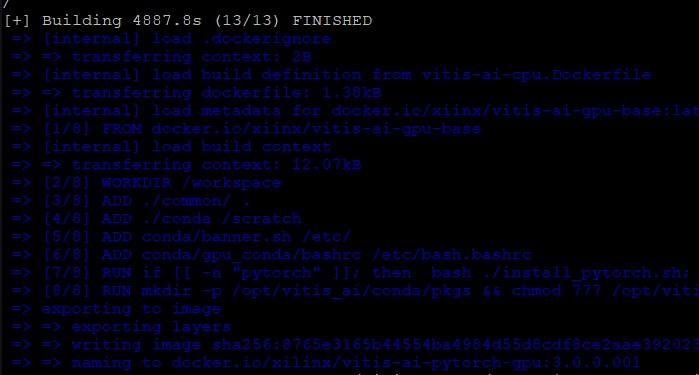

The build covers a lot of steps, and it should finish with output like the following:

[+] Building 4887.8s (13/13) FINISHED    docker:default
=> [internal] load .dockerignore    0.0s
=> => transferring context: 2B    0.0s
=> [internal] load build definition from vitis-ai-cpu.Dockerfile    0.1s
=> => transferring dockerfile: 1.38kB    0.0s
=> [internal] load metadata for docker.io/xilinx/vitis-ai-gpu-base:latest    0.0s
=> [1/8] FROM docker.io/xilinx/vitis-ai-gpu-base    0.7s
=> [internal] load build context    0.1s
=> => transferring context: 12.07kB    0.0s
=> [2/8] WORKDIR /workspace    0.8s
=> [3/8] ADD ./common/ .    0.6s
=> [4/8] ADD ./conda /scratch    0.5s
=> [5/8] ADD conda/banner.sh /etc/    0.5s
=> [6/8] ADD conda/gpu_conda/bashrc /etc/bash.bashrc    0.5s
=> [7/8] RUN if [[ -n "pytorch" ]]; then bash ./install_pytorch.sh; fi    4754.7s
=> [8/8] RUN mkdir -p /opt/vitis_ai/conda/pkgs && chmod 777 /opt/vitis_ai/conda/pkgs && ./install_vairuntime.sh && rm -fr ./*    87.5s
=> exporting to image    41.4s
=> => exporting layers    41.3s
=> => writing image sha256:8765e3165b44554ba4984d55d8cdf8ce2aae3920232d21616ff9a7e99f9a45a3    0.1s
=> => naming to docker.io/xilinx/vitis-ai-pytorch-gpu:3.0.0.001

To run NVIDIA GPU programs inside Docker, you need to add the corresponding package repository for Docker:

distribution=$(. /etc/os-release;echo $ID$VERSION_ID) && curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add - && curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

Run the command above to add the repository, then refresh the package index and install the toolkit:

sudo apt update

sudo apt install -y nvidia-container-toolkit

Update the Docker configuration:

sudo nvidia-ctk runtime configure --runtime=docker

Restart Docker:

sudo systemctl restart docker
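nvidia-ctk runtime configure registers an nvidia runtime in Docker's daemon configuration, which normally lives at /etc/docker/daemon.json. Below is a rough sketch of a sanity check, assuming that default path; has_nvidia_runtime is a hypothetical helper name used only for illustration:

```shell
#!/usr/bin/env bash
# has_nvidia_runtime is a hypothetical helper for illustration.
# It only looks for the string "nvidia" inside the daemon config file;
# it does not validate the JSON or prove that the GPU is usable.
has_nvidia_runtime() {
    local config="${1:-/etc/docker/daemon.json}"
    [ -r "$config" ] && grep -q '"nvidia"' "$config"
}

if has_nvidia_runtime; then
    echo "nvidia runtime is registered"
else
    echo "nvidia runtime not found in daemon.json"
fi
```

A more direct check is to run nvidia-smi inside a container started with the --gpus all option of docker run, using any image you already have locally.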

Start the container:

sudo ./docker_run.sh xilinx/vitis-ai-pytorch-gpu:latest

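Note that a single build still produces two tags: one with the version number and one named latest. If you have several versions, it is best to start the container by its version number.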
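To pick the versioned tag rather than latest, the list of tags can be filtered. The sketch below uses a hypothetical helper, pick_versioned_tag, which reads repository:tag lines and prints the first one whose tag is not latest; the sample input is the pair of tags from the build above.

```shell
#!/usr/bin/env bash
# pick_versioned_tag is a hypothetical helper for illustration.
# It reads "repository:tag" lines on stdin and prints the first line
# whose tag is not "latest"; it prints nothing if there is none.
pick_versioned_tag() {
    grep -v ':latest$' | head -n 1
}

# Example with the two tags produced by the build above.
printf '%s\n' \
    'xilinx/vitis-ai-pytorch-gpu:latest' \
    'xilinx/vitis-ai-pytorch-gpu:3.0.0.001' | pick_versioned_tag
```

With Docker available, the input could come from docker images --format '{{.Repository}}:{{.Tag}}' xilinx/vitis-ai-pytorch-gpu, and the printed name can then be passed to docker_run.sh.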

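Note that gosu may turn out to be missing. If so, add the following command to the Dockerfile, below the install_base step in the conda part (in practice, anywhere after the base container environment has been loaded will do):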

RUN apt update && apt install -y gosu && ln -s /usr/sbin/gosu /usr/local/bin/gosu

Now let's write a new demo based on resnet50.

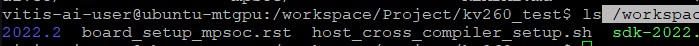

Once inside the container, go to the environment setup directory:

/workspace/board_setup/mpsoc/

Run the script:

host_cross_compiler_setup.sh

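Note one issue here: every time you enter the container and run the setup script, it uses wget to download two SDK files. Once downloaded, however, they are stored on the host. So after the first run, just comment out the wget lines, and restore them later if you ever need to download again.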
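Commenting out the downloads can be done by hand or with sed. Here is a minimal sketch, assuming each download command sits on a single line that starts with wget (multi-line commands with backslash continuations would need manual editing); comment_out_wget is a hypothetical helper, and the URL in the demonstration is a placeholder:

```shell
#!/usr/bin/env bash
# comment_out_wget is a hypothetical helper for illustration.
# It prefixes every line that starts with "wget" (after optional
# indentation) with "# " and keeps the original as FILE.bak.
comment_out_wget() {
    local script="$1"
    sed -i.bak -E 's/^([[:space:]]*)(wget[[:space:]])/\1# \2/' "$script"
}

# Demonstration on a throwaway file; the URL is a placeholder.
demo=$(mktemp)
printf '%s\n' 'echo start' '  wget https://example.invalid/sdk.sh -O sdk.sh' > "$demo"
comment_out_wget "$demo"
cat "$demo"
rm -f "$demo" "$demo.bak"
```

Applied to host_cross_compiler_setup.sh, this would leave a host_cross_compiler_setup.sh.bak next to it; moving that backup over the edited file restores the downloads.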

Run:

unset LD_LIBRARY_PATH

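Then set up the cross-compilation environment. If you want to be able to return to the normal build environment, open an extra layer of bash first. Afterwards you can simply exit that bash to get the original environment back, which saves restarting Docker every time.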

source ~/petalinux_sdk_2022.2/environment-setup-cortexa72-cortexa53-xilinx-linux
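The extra bash layer works because a child shell gets its own copy of the environment, and any changes made there disappear when it exits. A small sketch of that behaviour, using a made-up variable DEMO_SDK_ENV in place of the real variables set by the SDK script:

```shell
#!/usr/bin/env bash
# DEMO_SDK_ENV is a made-up variable standing in for whatever the
# SDK environment script exports; it is not a real SDK variable.
export DEMO_SDK_ENV="native"

# The child bash changes the variable, as sourcing the SDK script would.
inner=$(bash -c 'export DEMO_SDK_ENV="cross"; echo "$DEMO_SDK_ENV"')

echo "inside child shell: $inner"
echo "after child exits:  $DEMO_SDK_ENV"
```

In the container the same pattern would be: start bash, run unset LD_LIBRARY_PATH and load the SDK environment script inside it, cross-compile, then exit to return to the untouched parent shell.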

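With the help of the documentation, put together a resnet50 demo.

This demo does image classification, but the bundled resnet50 model is not very accurate: unless the subject is very obvious, there is a good chance it will misclassify the image.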

#include <iostream>
#include <memory>
#include <string>
#include <vector>
#include <dirent.h>
#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <vitis/ai/classification.hpp>
#include <vitis/ai/demo.hpp>

// Collect the names of all entries in `path`, skipping "." and "..".
void getFiles(const std::string& path, std::vector<std::string>& files) {
  DIR* dir = opendir(path.c_str());
  if (dir == nullptr) {
    std::cerr << "Failed to open directory." << std::endl;
    return;
  }
  struct dirent* entry;
  while ((entry = readdir(dir)) != nullptr) {
    const std::string name = entry->d_name;
    if (name != "." && name != "..") {
      std::cout << name << std::endl;
      files.push_back(name);
    }
  }
  closedir(dir);
}

int main() {
  // The model is at /usr/share/vitis_ai_library/model/resnet50/resnet50.xmodel
  auto network = vitis::ai::Classification::create("resnet50");
  std::string path = "../images/";
  std::vector<std::string> files;
  getFiles(path, files);
  for (const auto& item : files) {
    auto image = cv::imread(path + item);
    if (image.empty()) {  // not a readable image, skip it
      std::cerr << "Cannot read " << item << std::endl;
      continue;
    }
    auto result = network->run(image);
    std::cout << "animal type result:" << std::endl;
    // Print each predicted label with its score.
    for (const auto& r : result.scores) {
      std::cout << result.lookup(r.index) << ": " << r.score << std::endl;
    }
  }
  return 0;
}

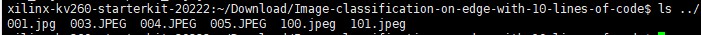

Here is the image directory:

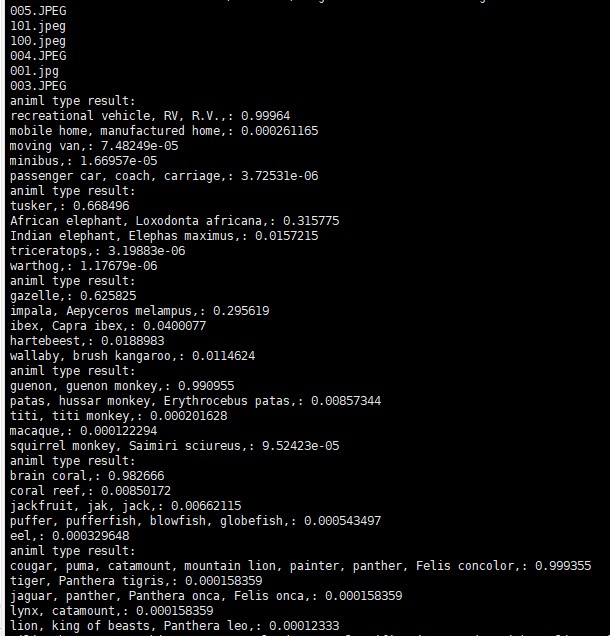

The result:
